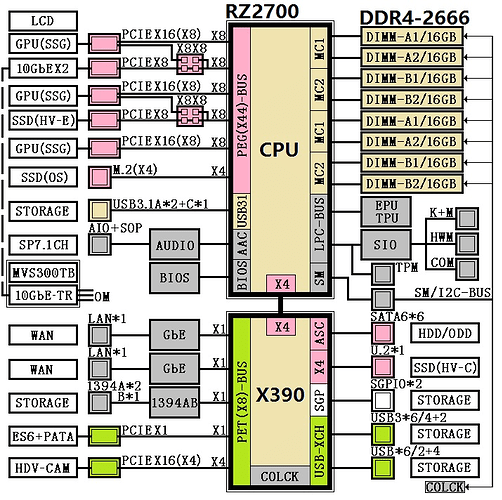

I have an ASUS Zenith Extreme board with a Threadripper 1950X (16C/32T) and 64 GB of DDR4-3000.

Based on my CPU and RAM, and on what I've read, I should be able to run 20 plots in parallel, given enough NVMe drives.
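As a rough cross-check, plot concurrency is capped by whichever runs out first: threads or RAM. The Python sketch below is a crude model. It assumes each plot holds its -r threads for the whole run, reserves chia's default 3389 MiB buffer, and treats the 64 GB as GiB. `headroom_mib` is a hypothetical allowance for the OS. The stage 2 and 3 log lines below report well under 100% CPU, so the thread-based figure is probably pessimistic.

```python
def max_parallel_plots(threads: int, ram_gib: float, threads_per_plot: int = 2,
                       buffer_mib: int = 3389, headroom_mib: int = 0) -> dict:
    """Crude concurrency limits: every plot pins threads_per_plot threads and one buffer.

    buffer_mib defaults to 3389 (assumed chia default); headroom_mib is a
    hypothetical amount of RAM kept back for the OS.
    """
    by_threads = threads // threads_per_plot
    usable_mib = ram_gib * 1024 - headroom_mib
    by_ram = int(usable_mib // buffer_mib)
    return {"by_threads": by_threads, "by_ram": by_ram,
            "limit": min(by_threads, by_ram)}


if __name__ == "__main__":
    # 1950X: 32 hardware threads; 64 GB treated as 64 GiB; -r 2; default buffer.
    print(max_parallel_plots(threads=32, ram_gib=64))
```

With 32 threads and 64 GiB this gives 16 plots by threads and 19 by RAM.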

I installed 4 NVMe disks. The two Intel drives are on the DIMM.2 card next to the RAM, and the two Samsung NVMes are installed in PCIe slots 2 and 4. Per the manual, neither slot shares PCIe lanes unless U.2 storage is in use, which it is not.
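To confirm that no drive actually ended up on a reduced link, Linux exposes each PCIe device's negotiated link in sysfs (`current_link_speed` and `current_link_width`, next to `max_link_speed` and `max_link_width`). A small Python sketch that reads these for every NVMe controller, assuming the usual `/sys/class/nvme/nvmeN/device` symlink to the controller's PCI device:

```python
from pathlib import Path

LINK_ATTRS = ("current_link_speed", "current_link_width",
              "max_link_speed", "max_link_width")


def nvme_links(sysfs_root: str = "/sys/class/nvme") -> dict:
    """Map each NVMe controller (nvme0, nvme1, ...) to its PCIe link attributes."""
    root = Path(sysfs_root)
    links = {}
    if not root.is_dir():
        return links  # no NVMe class directory (or not Linux)
    for ctrl in sorted(root.iterdir()):
        pci_dev = ctrl / "device"  # symlink to the controller's PCI device
        links[ctrl.name] = {
            attr: (pci_dev / attr).read_text().strip()
            for attr in LINK_ATTRS
            if (pci_dev / attr).is_file()
        }
    return links


if __name__ == "__main__":
    for name, attrs in nvme_links().items():
        print(name, attrs)
```

A drive whose `current_link_width` is lower than its `max_link_width` would be the first one to look at.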

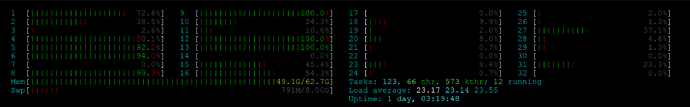

I'm running 17 parallel plotting processes, each with -r 2 and the default RAM settings. Here's how far they got after about 11 hours.

Samsung 2 TB NVMe

4 parallel processes, 1 hr stagger:

- Completed, and up to Stage 1 Phase 3: Computing table 2. Forward propagation table time: 2286.548 seconds. CPU (114.340%) Mon Jun 21 01:29:29 2021
- Starting phase 4/4
- Stage 3: Compressing tables 5 and 6. First computation pass time: 1340.103 seconds. CPU (78.650%)
- (Forgot to start this one till later) Stage 1: Forward propagation table time: 2071.915 seconds. CPU (123.760%) Mon Jun 21 01:00:37 2021. Computing table 3

4 x Mushkin 480 GB SSD, RAID 0, 4x PCIe 2.0 HBA

4 parallel processes, 1 hr stagger:

- Stage 3: Total compress table time: 2875.590 seconds. CPU (29.310%) Mon Jun 21 00:43:17 2021. Compressing tables 2 and 3
- Stage 2: Total backpropagation time:: 3196.063 seconds. CPU (29.950%) Mon Jun 21 01:35:57 2021. Backpropagating on table 2
- Stage 2: Total backpropagation time:: 3254.953 seconds. CPU (33.220%) Mon Jun 21 01:18:43 2021. Backpropagating on table 5
- Stage 2: Total backpropagation time:: 1991.244 seconds. CPU (21.580%) Mon Jun 21 01:34:53 2021. Backpropagating on table 6

Intel NVMe #1

3 parallel processes, 1 hr stagger:

- Stage 1: Forward propagation table time: 10542.896 seconds. CPU (32.580%) Mon Jun 21 01:31:50 2021. Computing table 6
- Stage 1: Forward propagation table time: 9100.620 seconds. CPU (38.460%) Sun Jun 20 23:29:54 2021. Computing table 5
- (Forgot to start this one till later) Stage 1: F1 complete, time: 1288.21 seconds. CPU (15.69%) Mon Jun 21 00:46:49 2021. Computing table 2

Intel NVMe #2

3 parallel processes, 1 hr stagger:

- (This one was paused for about 30 min) Stage 2: Total backpropagation time:: 2978.436 seconds. CPU (15.070%) Sun Jun 20 23:51:19 2021. Backpropagating on table 6
- (This one was paused for about 30 min) Stage 1: Forward propagation table time: 5413.073 seconds. CPU (60.530%) Sun Jun 20 23:30:43 2021. Computing table 7
- (Started this one after pausing the others) Stage 1: Computing table 2. Forward propagation table time: 2914.677 seconds. CPU (91.380%) Mon Jun 21 01:46:54 2021 (not bad so far)

Samsung 1 TB NVMe

3 parallel processes, 1 hr stagger:

- Stage 1: Forward propagation table time: 8107.955 seconds. CPU (41.920%) Sun Jun 20 23:51:51 2021. Computing table 6
- Stage 1: Forward propagation table time: 9605.114 seconds. CPU (36.580%) Sun Jun 20 23:55:08 2021. Computing table 5
- Stage 1: Computing table 4. Forward propagation table time: 9866.944 seconds. CPU (35.310%) Mon Jun 21 01:10:37 2021
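To compare the drives side by side, the timing lines above could be pulled into a table. A minimal Python sketch, assuming every timing line has the `<label> time: <seconds> seconds. CPU (<percent>%)` shape quoted in this post (the regex is fitted to these excerpts, not to chia's full log format):

```python
import re
from typing import NamedTuple, Optional


class PhaseTime(NamedTuple):
    label: str      # e.g. "Forward propagation table time"
    wall_s: float   # wall-clock seconds as printed
    cpu_pct: float  # the "CPU (...%)" figure as printed


# Fitted to lines such as
#   "Total backpropagation time:: 3196.063 seconds. CPU (29.950%) Mon Jun 21 ..."
#   "F1 complete, time: 1288.21 seconds. CPU (15.69%) ..."
TIMING_RE = re.compile(
    r"(?P<label>[A-Za-z0-9 ,]*?time):*\s*"
    r"(?P<wall>\d+(?:\.\d+)?) seconds\. CPU \((?P<cpu>\d+(?:\.\d+)?)%\)"
)


def parse_timing(line: str) -> Optional[PhaseTime]:
    """Return the timing found on a log line, or None if the line has none."""
    m = TIMING_RE.search(line)
    if m is None:
        return None
    return PhaseTime(m.group("label").strip(), float(m.group("wall")),
                     float(m.group("cpu")))


if __name__ == "__main__":
    sample = ("Stage 1: Forward propagation table time: 2286.548 seconds. "
              "CPU (114.340%) Mon Jun 21 01:29:29 2021")
    print(parse_timing(sample))
```

Lines without a timing, such as `Computing table 2`, return `None`.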

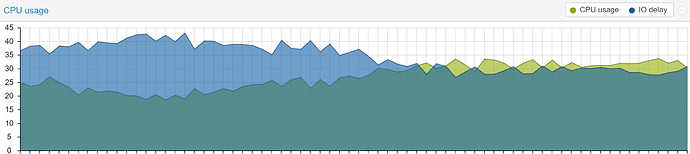

So, as you can see, my 2 TB NVMe, running more processes, has already completed an entire plot, while the 1 TB NVMe of the same make and a similar model (one year newer) is still struggling to get out of stage 1.

I think this has something to do with how Linux schedules CPU time. On one of the Intel NVMes, I paused the two processes running on that disk and started a third. Even with 14 other processes running, that third process, effectively alone on the disk, ran at a pretty respectable rate, despite being the 15th of 15 processes when I started it:

Stage 1 Table 2:

Forward propagation table time: 2914.677 seconds. CPU (91.380%) Mon Jun 21 01:46:54 2021

Compare that with the Samsung 1 TB for the same phase, with only one other process on that drive and only 9 parallel processes overall:

Computing table 2

Forward propagation table time: 7332.526 seconds. CPU (40.050%) Sun Jun 20 19:20:28 2021

That is some crap time.

Finally, here is the 2 TB Samsung on its second plot, with 17 parallel processes overall and three others on that disk:

Computing table 2

Forward propagation table time: 2286.548 seconds. CPU (114.340%) Mon Jun 21 01:29:29 2021
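The three table-2 numbers compared above can also be read as CPU-seconds rather than wall time. A small Python sketch, assuming the logged CPU figure is CPU time divided by wall-clock time (that appears to be how it is computed, but treat it as an assumption):

```python
# Stage 1, table 2 figures quoted above: (wall-clock seconds, logged CPU %).
RUNS = {
    "Intel NVMe #2, 1 on disk / 15 total": (2914.677, 91.380),
    "Samsung 1 TB, 2 on disk / 9 total": (7332.526, 40.050),
    "Samsung 2 TB, 4 on disk / 17 total": (2286.548, 114.340),
}


def cpu_seconds(wall_s: float, cpu_pct: float) -> float:
    """CPU time used, assuming the logged CPU % is cpu_time / wall_time * 100."""
    return wall_s * cpu_pct / 100.0


if __name__ == "__main__":
    for name, (wall, pct) in RUNS.items():
        print(f"{name}: wall {wall:.0f} s, CPU {cpu_seconds(wall, pct):.0f} s")
```

This gives about 2663, 2937 and 2614 CPU-seconds respectively. Under that assumption all three runs did roughly the same amount of CPU work; what differs by about 3x is wall-clock time, i.e. time the process spent not running. These log lines alone can't distinguish waiting for the disk from waiting for a CPU.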

WTF? Why does Linux consistently give some processes more CPU time than others, for no apparent reason?
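One way to start narrowing this down is to check what the slow processes are actually waiting on. Linux reports per-task scheduler statistics in `/proc/<pid>/task/<tid>/schedstat`: time on a CPU (ns), time spent runnable but waiting in the run queue (ns), and the number of timeslices. A hedged Python sketch that sums these over a plotter's threads; it assumes a kernel with scheduler statistics available (`CONFIG_SCHED_INFO`), and the script name in the usage comment is just for illustration:

```python
import sys
from pathlib import Path
from typing import NamedTuple


class SchedStat(NamedTuple):
    on_cpu_s: float         # time spent running on a CPU
    runqueue_wait_s: float  # time runnable but waiting for a CPU
    timeslices: int         # number of times scheduled onto a CPU


def parse_schedstat(text: str) -> SchedStat:
    """Parse the three fields of a schedstat file (ns, ns, count)."""
    on_cpu_ns, wait_ns, slices = text.split()[:3]
    return SchedStat(int(on_cpu_ns) / 1e9, int(wait_ns) / 1e9, int(slices))


def process_schedstat(pid: int, proc_root: str = "/proc") -> SchedStat:
    """Sum schedstat over the live threads of one process.

    /proc/<pid>/schedstat reports a single task (the main thread), so the
    per-thread files under /proc/<pid>/task/ are summed instead. Threads
    that have already exited are not counted.
    """
    on_cpu = wait = 0.0
    slices = 0
    for path in Path(proc_root, str(pid), "task").glob("*/schedstat"):
        s = parse_schedstat(path.read_text())
        on_cpu += s.on_cpu_s
        wait += s.runqueue_wait_s
        slices += s.timeslices
    return SchedStat(on_cpu, wait, slices)


if __name__ == "__main__":
    # Usage (hypothetical script name): python schedstat.py <pid> [<pid> ...]
    for arg in sys.argv[1:]:
        s = process_schedstat(int(arg))
        print(f"pid {arg}: on CPU {s.on_cpu_s:.0f} s, "
              f"waiting for CPU {s.runqueue_wait_s:.0f} s, {s.timeslices} slices")
```

If a slow plotter's run-queue wait is large compared with its on-CPU time, it is being starved of CPU; if both are small compared with its elapsed time, it is most likely blocked on something else, such as disk I/O.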