I’m trying to plot on a 4-core/8-thread laptop to an NVMe drive connected through the laptop’s USB-C port. I know this is a low-performance setup, but it’s just an experiment to see whether upgrading this laptop’s RAM is worth it. I called `plots create` with the default arguments (1 plot, 2 threads) but set the buffer to 2800 (MiB), since the laptop currently has only 8 GB of RAM and higher values were killing the plotting process in under a minute. The environment is MX Linux.

Very few external USB NVMe adapters can sustain the intense I/O needed to create plots. I found only one controller chipset that worked, the ASM2364:

> Equipped with ORICO’s latest USB3.2 Gen2 main control chip ASM2364, which supports up to 20Gbps transmission bandwidth, uses PCIe 3.0 x4 interface with a theoretical bandwidth of 4GB/s, fully meets the bandwidth requirements of USB 3.2 20Gbps (2.5GB/s) and supports NVMe.
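The figures in that quote can be sanity-checked with a little arithmetic. This sketch assumes PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding and that the 20 Gbps USB link uses 128b/132b encoding; the quoted 2.5GB/s is simply the raw 20 Gbit/s divided by 8. Real-world throughput will be lower still because of protocol overhead.

```python
# Back-of-the-envelope link bandwidth check for the figures quoted above.
# Assumptions: PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding;
# the 20 Gbit/s USB 3.2 link uses 128b/132b encoding.

def to_gbytes_per_s(gbits_per_s: float) -> float:
    """Convert a rate in Gbit/s to GB/s (decimal units, 8 bits per byte)."""
    return gbits_per_s / 8

def effective_rate(raw_gbits: float, payload_bits: int, total_bits: int) -> float:
    """Line rate left after removing line-encoding overhead, in Gbit/s."""
    return raw_gbits * payload_bits / total_bits

pcie3_x4_raw = 8.0 * 4  # 4 lanes at 8 GT/s each
pcie3_x4 = to_gbytes_per_s(effective_rate(pcie3_x4_raw, 128, 130))
usb_raw = to_gbytes_per_s(20.0)  # the "2.5GB/s" in the quote
usb_effective = to_gbytes_per_s(effective_rate(20.0, 128, 132))

if __name__ == "__main__":
    print(f"PCIe 3.0 x4 after encoding: {pcie3_x4:.2f} GB/s")
    print(f"USB 20 Gbps raw: {usb_raw:.2f} GB/s, after encoding: {usb_effective:.2f} GB/s")
```

Under these assumptions the PCIe 3.0 x4 side (about 3.94 GB/s) has more headroom than the USB link (about 2.42 GB/s after encoding), which is consistent with the claim that the chip fully meets the USB bandwidth requirement. This only checks peak bandwidth, not how a controller behaves under sustained load.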

The others failed either on a single plot or when running two plots simultaneously.

So it’s likely the controller chipset.

Thanks for the reply. The one I have is also USB 3.2:

Sabrent USB 3.2 Type-C Tool-Free Enclosure for M.2 PCIe NVMe and SATA SSDs (EC-SNVE).

Still, how quickly it crashes seems to depend on the buffer size I assign. I was able to use the laptop’s SATA port for a drive; the laptop’s restriction seems to apply only to booting. I’ll try the SATA port first, and later the NVMe adapter again once the extra RAM I ordered anyway arrives.

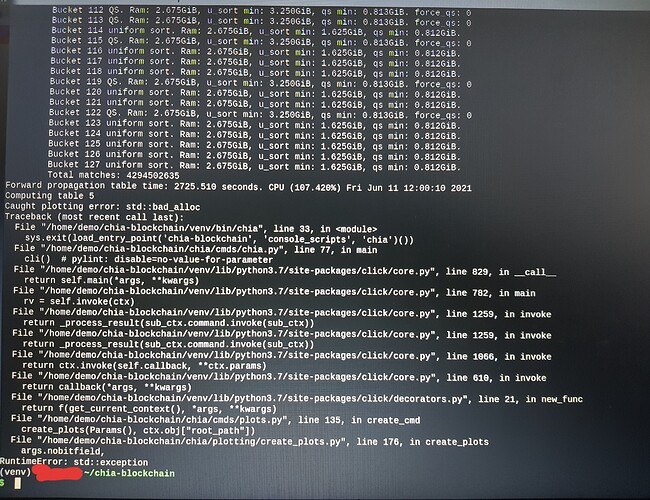

It’s right there in your dump: `std::bad_alloc` means the process didn’t have enough memory. It tried to allocate memory and the allocation failed (other kinds of failures can produce this exception, but they are very unlikely in production code). This is likely the result of setting the buffer smaller than the default value. While the default probably has a small safety margin, I doubt it has as much as you removed by taking it down to 2800. The amount of memory needed is determined by the plot size (k32 minimum) and the number of buckets (128 by default). Based on the amount of memory available, the plotter chooses between two different sorting algorithms. If there isn’t enough memory even for the more memory-efficient algorithm, you’re SOL and it fails, just like it did for you.
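The "pick a sort based on memory, or fail" behavior described above can be illustrated with a hypothetical sketch. The function `choose_sort`, the strategy names, and the memory multipliers are all assumptions made up for illustration; the real plotter’s internals and thresholds are not shown in this thread. Python’s `MemoryError` stands in for C++’s `std::bad_alloc`.

```python
# Hypothetical illustration: choose a sort strategy based on the memory
# budget, and fail if even the most memory-efficient one does not fit.
# Strategy names and overhead multipliers are assumptions, not the real
# plotter's values.

def choose_sort(bucket_size: int, buffer_size: int,
                efficient_overhead: float = 1.0,
                fast_overhead: float = 2.0) -> str:
    """Return the strategy that fits in buffer_size (same units as bucket_size).

    fast_overhead / efficient_overhead are assumed multiples of bucket_size
    that each strategy needs as working memory.
    """
    if bucket_size * fast_overhead <= buffer_size:
        return "fast"  # the roomier algorithm fits
    if bucket_size * efficient_overhead <= buffer_size:
        return "memory-efficient"  # fall back to the leaner algorithm
    # Nothing fits: the C++ plotter would surface this as std::bad_alloc.
    raise MemoryError(
        f"bucket of {bucket_size} does not fit in buffer of {buffer_size}")

if __name__ == "__main__":
    for buffer_mib in (4000, 2800, 1000):  # hypothetical buffers, in MiB
        try:
            print(buffer_mib, choose_sort(1500, buffer_mib))
        except MemoryError as exc:
            print(buffer_mib, "failed:", exc)
```

With a hypothetical 1500 MiB bucket, a 4000 MiB buffer picks the fast path, 2800 MiB falls back to the memory-efficient one, and 1000 MiB fails. In bucketed sorts generally, more buckets means smaller buckets and therefore less memory per sort, which is why the bucket count matters.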

With only 8 GB of RAM, you have enough to run only one plot at a time, unless you strip Linux down to a bare-minimum configuration, such as a basic server setup that doesn’t require much memory.

Speculating a bit: have you tried disabling the bitfield option? Using the bitfield instructions may impose memory alignment or page-size requirements that can’t be met in your configuration. This is just a guess; I haven’t spent much time looking into the bitfield instructions used by the Chia plotter.

Awesome. I have a 16 GB RAM module on the way. I mostly suspected the small amount of RAM, and your input sounds on point. I used `free` to check how much RAM was available, and it reported around 6 GB (including cache and buffers, which the OS is supposed to give back when a process needs them), so I didn’t think the default buffer size would be a problem. When I turned it down to 2800 it seemed to work at first, but it just took longer to fail. I should have the laptop with more memory soon, thanks!
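A better number to compare against the buffer than free plus buff/cache is the "available" column of `free`, which comes from `MemAvailable` in `/proc/meminfo`; the kernel’s estimate already accounts for the fact that not all cache can be reclaimed. The sketch below reads that field and checks whether a requested buffer fits. The helper names and the 512 MiB headroom are hypothetical choices for illustration.

```python
# Sketch: does a requested plotting buffer (in MiB) fit in the memory the
# kernel reports as available? Reads /proc/meminfo (Linux only).
# Helper names and the default headroom are illustrative assumptions.

def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value in kB}."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])  # values are reported in kB
    return info

def fits_buffer(info: dict[str, int], buffer_mib: int,
                headroom_mib: int = 512) -> bool:
    """True if MemAvailable covers the buffer plus some (arbitrary) headroom."""
    available_mib = info["MemAvailable"] // 1024
    return available_mib >= buffer_mib + headroom_mib

if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as f:
            info = parse_meminfo(f.read())
    except FileNotFoundError:
        print("/proc/meminfo not found (not Linux?)")
    else:
        print("MemAvailable:", info["MemAvailable"] // 1024, "MiB")
        for buffer_mib in (2800, 4000):
            print(buffer_mib, "MiB fits:", fits_buffer(info, buffer_mib))
```

The headroom is there because the plotting process uses some memory beyond the buffer itself; the right amount is a guess, not a documented value.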

I haven’t touched the bitfield parameter. I’m still a noob at this, and all I know is that one bitfield setting uses 4 GB and the other 6 GB. For this low-power setup, do you recommend experimenting with that setting?

So if you run it with default values, it fails? Does that failure show the same `std::bad_alloc` message? With 6 GB free you should be able to run one plot with default values. Do you possibly have swap disabled?

Yes, I think I have swap disabled.
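Swap status is easy to confirm rather than guess: `free` shows a Swap row and `swapon --show` lists active swap areas. The same information is in `/proc/meminfo`, which the sketch below reads; the `swap_status` helper is a hypothetical name for illustration.

```python
# Sketch: report swap status from /proc/meminfo-style text (Linux).
# A SwapTotal of 0 kB means no swap device or file is active.

def swap_status(meminfo_text: str) -> tuple[int, int]:
    """Return (SwapTotal, SwapFree) in MiB; missing fields count as 0."""
    values = {}
    for line in meminfo_text.splitlines():
        key, sep, rest = line.partition(":")
        if sep and key in ("SwapTotal", "SwapFree"):
            values[key] = int(rest.split()[0]) // 1024  # kB -> MiB
    return values.get("SwapTotal", 0), values.get("SwapFree", 0)

if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as f:
            total, free = swap_status(f.read())
    except FileNotFoundError:
        print("/proc/meminfo not found (not Linux?)")
    else:
        if total == 0:
            print("Swap is disabled")
        else:
            print(f"Swap: {total} MiB total, {free} MiB free")
```

With swap off, the kernel cannot page anonymous memory out, so memory pressure leads sooner to failed allocations or the OOM killer. Adding swap trades speed for headroom.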