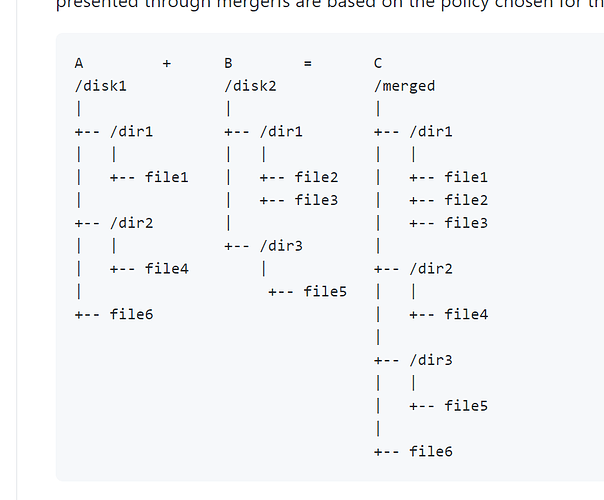

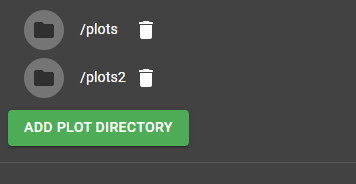

Well, my JBOD noob level has presented itself. I have extensive experience in all sorts of RAID in very large enterprise environments. But in that field, you just don’t play with JBOD. I understood the concept, but never implemented it. So when I setup my first 2 NAS systems with it, I setup one JBOD storage pool with all the drives in it and then one volume spanning all drives in that pool. I assumed that the JBOD setting meant the system would see they are separate drives and treat them that way. NOPE. I had this nagging feeling about the setup (screaming SOMETHING IS WRONG). Fortunately I had a 3rd NAS that is not in service yet. So, I tested it. Set it up the same way, dropped some files on the share and pulled a drive. CRASH. Data gone. Well, that wasn’t what I was expecting but what I feared. So, I have setup that NAS (has 8 drives) with 8 separate storage pools, each pool has a volume and each volume has a share. Now I can map to each share and know that it is an actual physical drive. Now if a drive dies, I lose that drive, nothing else. The issue now is that I have to move all of my plots from the misconfigured devices to this new one so I can rebuild those.

Is my new approach correct? Or did I just do something wrong in the original configuration that would have made that setup work properly? Hopefully what I am doing now is right and this is just a warning for everyone to do it right.