What is the brand of your NVMes?

Since your CPU has 12 physical / 24 logical cores, and it looks like you have dedicated that box to MadMax, you may want to set -r to 24 or 25. I don’t think that MM really respects that value (i.e., it grabs more logical cores than specified), but an explicit setting usually helps a bit in case it hesitates.

I think that you made a mistake and specified both t1 and t2 as /media/ss2. This may be the biggest slowdown.

I would also start with both -v and -u set to 256 (or 8, which looks nicer).

I am not sure, but I think that the value:

[P1] Table 1 took 16.0261 sec

more or less represents pure CPU capabilities (and it is in a good range). However,

Phase 1 took 2678.31 sec

shows temp2 performance, and it is slow. During that phase t1 is not used much, but it may still be slowing down t2, since in that run they are the same.

Also, the fourth phase:

Phase 4 took 55.906 sec

reflects mostly temp1 performance, and it is in a good range.
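If you want to compare runs after changing t1/t2, a small script can pull those timings out of a saved log. This is only a rough sketch: it assumes the log lines look exactly like the three quoted above, and the function name `summarize` is made up for illustration. Any other plotter output is simply ignored.

```python
import re

# Patterns match only the line shapes quoted in this post.
PHASE_RE = re.compile(r"^Phase (\d) took ([\d.]+) sec")
TABLE_RE = re.compile(r"^\[P(\d)\] Table (\d) took ([\d.]+) sec")


def summarize(log_text):
    """Return ({phase: seconds}, {(phase, table): seconds}) from a plotter log."""
    phases, tables = {}, {}
    for line in log_text.splitlines():
        line = line.strip()
        m = PHASE_RE.match(line)
        if m:
            phases[int(m.group(1))] = float(m.group(2))
            continue
        m = TABLE_RE.match(line)
        if m:
            tables[(int(m.group(1)), int(m.group(2)))] = float(m.group(3))
    return phases, tables


if __name__ == "__main__":
    sample = (
        "[P1] Table 1 took 16.0261 sec\n"
        "Phase 1 took 2678.31 sec\n"
        "Phase 4 took 55.906 sec\n"
    )
    phases, tables = summarize(sample)
    for phase, secs in sorted(phases.items()):
        print(f"Phase {phase}: {secs:.1f} sec ({secs / 60:.1f} min)")
```

Running it on the log from before and after a change makes it easy to see whether Phase 1 (temp2-bound) or Phase 4 (temp1-bound) is the one that moved.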

Also, depending on how many plots you would like to get at the end of the day, I would suggest upgrading your RAM to 128 GB, using it as temp2, and running your NVMes in RAID0 as temp1. MM does not use that much RAM by itself, so anything above 16 GB is not needed for the plotter. However, if you have 64 GB, in Windows you can use PrimoCache and ask it to never write data to the SSDs, which is a good use of your RAM above that 16 GB and saves your NVMes a little. With 128 GB you don’t need PrimoCache, as you will use a 110 GB RAM drive for t2 (which saves you about 75% of the life span of your NVMes); this applies to both Windows and Linux.

Update:

Sorry, I misread your MM command. You have plenty of room on your NVMes, so there is no need to use one purely as the working drive and the other purely as the destination. That really slows down the working one and underuses the destination. I would suggest that you run it like this:

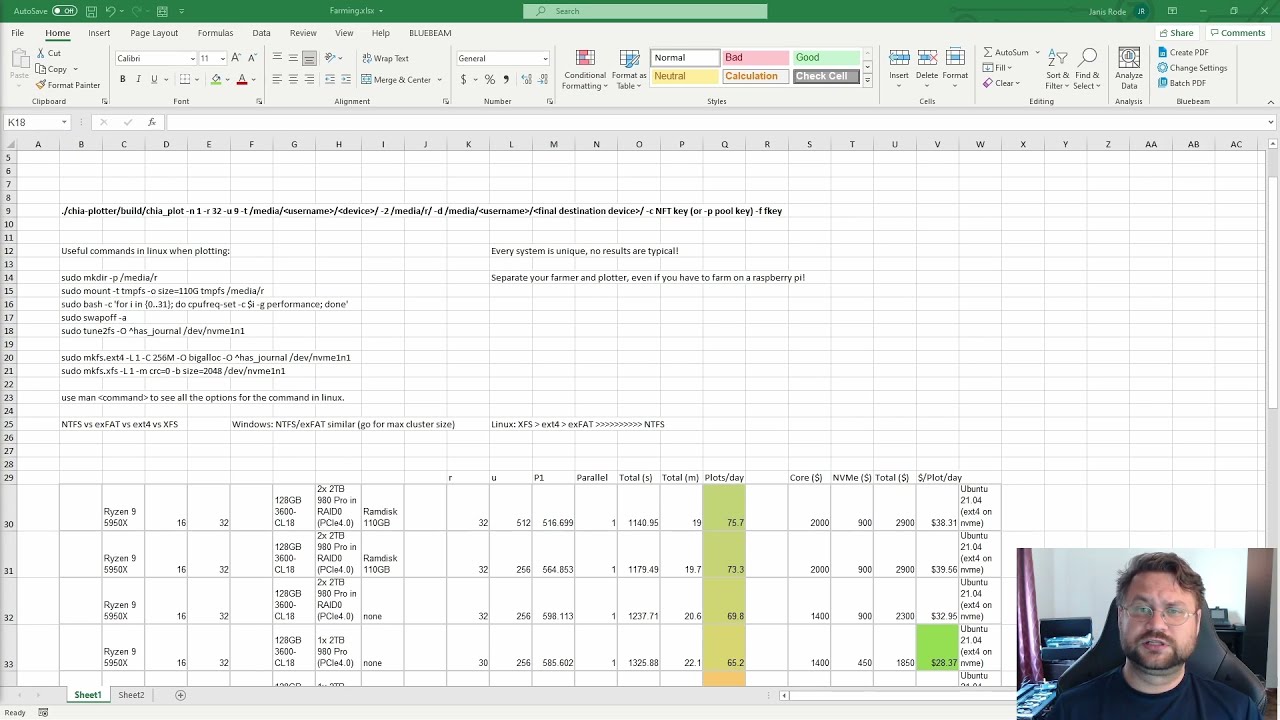

-n 2 -r 24 -v 8 -u 8 -t /media/ssd1/tmp -2 /media/ssd2/tmp -d /media/ssd1/xfr -f xxxxxxxx -c xxxxxxxxxxxxxxx

You can store about 15 final plots on ssd1 before MM starts barfing. I assume that you still need to xfr those plots to some destination HD, though (manually or via some script), and that will keep MM running.
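For the "via some script" part, something along these lines might do. It is a minimal sketch, not a tested production mover: it assumes that only finished plots in the -d directory carry the .plot extension (check that on your setup), and the names `move_finished_plots`, `watch` and the `.partial` suffix are invented here.

```python
import shutil
import time
from pathlib import Path


def move_finished_plots(src_dir, dst_dir):
    """Move every *.plot file from src_dir to dst_dir; return the moved names."""
    src, dst = Path(src_dir), Path(dst_dir)
    moved = []
    for plot in sorted(src.glob("*.plot")):
        # Land the file under a temporary name first, so a half-copied file
        # on the destination is never mistaken for a complete plot.
        partial = dst / (plot.name + ".partial")
        shutil.move(str(plot), str(partial))
        partial.rename(dst / plot.name)
        moved.append(plot.name)
    return moved


def watch(src_dir, dst_dir, interval_sec=60):
    """Poll src_dir forever, moving plots as they appear (interval is arbitrary)."""
    while True:
        for name in move_finished_plots(src_dir, dst_dir):
            print("moved", name)
        time.sleep(interval_sec)
```

You would start it with something like `watch("/media/ssd1/xfr", "/mnt/farm1")`, where `/mnt/farm1` is just a placeholder for wherever your destination HD is mounted.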

If for now you want to run MM as in the line I gave you, you may also use the -G flag. That will balance the load between the t1 and t2 SSDs, so they wear out at the same rate.

By the way, if your box is set up just to plot, upgrade to Ubuntu 21.04. Unless you have some specific needs, there is no point in sticking with 20.04.