You don’t need chia to run MM. You only need your f/c/p keys. So, once you have built it, you can move the binary to wherever is convenient for you.

I am not saying that the MM provided by chia is borked, rather that I have basically zero confidence in chia providing sound / tested code. Also, I see no reason to install chia if MM works by itself. All MM updates will end up in the MM GitHub repo, and may reach chia late or not at all. That said, I have never needed to run the MM they provide.

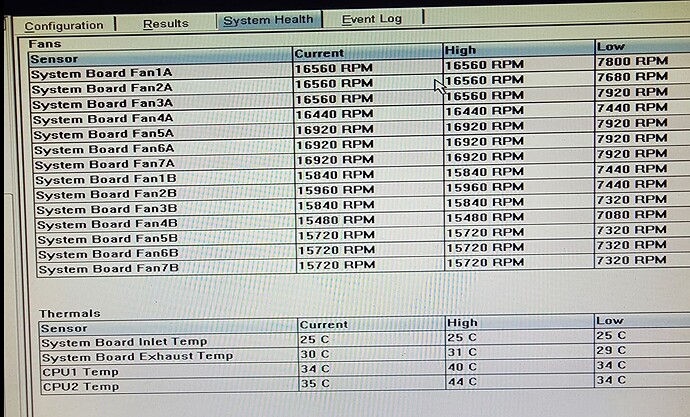

For the time being, I would make just one RAID0 array with as many SAS drives as you have / can connect to your box, and would not use t2 (for now). RAID0 with 2 SAS drives is really slow, so combining those two RAIDs into one may (or may not) provide a small improvement.
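In case it helps, here is a rough sketch of how a single RAID0 array over all drives could be put together with mdadm. The array name `/dev/md0` and the `/dev/sdX` device names are placeholders I made up, and `raid0_cmd` is just a hypothetical helper that prints the command rather than running it, since creating an array wipes the member drives.

```shell
#!/usr/bin/env bash
# Sketch only: print the mdadm command for ONE RAID0 array over all listed drives.
# Assumptions: /dev/md0 and the /dev/sdX names are placeholders, not your real devices.
# Nothing here touches a disk; the command is only echoed.

raid0_cmd() {
    local md="$1"; shift
    # After the shift, $# is the number of member drives and $* is their list.
    echo "mdadm --create $md --level=0 --raid-devices=$# $*"
}

# Example: four SAS drives in one array instead of two 2-drive arrays.
raid0_cmd /dev/md0 /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

Check the device names with `lsblk` first, then run the printed command as root only once you are sure those drives hold nothing you need.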

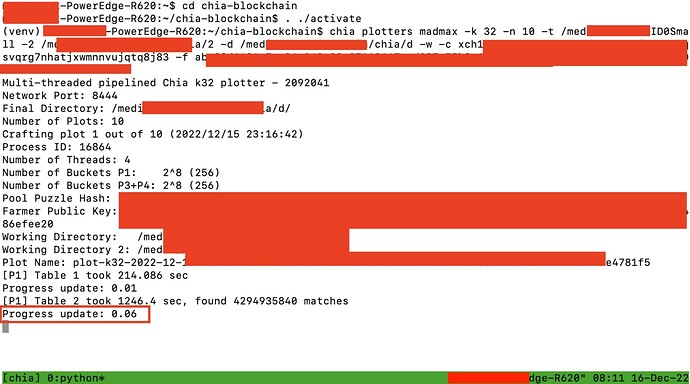

What you posted (200 sec for Table 1) is 20x slower than one instance on my box. Sure, I have a 2695 v2 with 12 physical cores, and I run MM completely from RAM. Once you have your box under control, you should see about 30-35 mins / instance for k32 plots (after making all possible upgrades).

Just to get it going, I would avoid virtualization for the time being, and potentially disable the second CPU. MM may have problems spanning 2 CPUs (which is why numactl is needed).
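If you would rather keep both CPUs enabled, something along these lines should keep one MM instance on a single socket and its local memory. Treat it as a sketch: the binary path `./chia_plot`, node 0, the 12 threads, the directories, and the key variables are all my assumptions, and `numa_plot_cmd` is a hypothetical helper that only prints the command.

```shell
#!/usr/bin/env bash
# Sketch only: print a numactl-wrapped MadMax command pinned to one NUMA node.
# Assumptions: ./chia_plot, node 0, 12 threads, and both directories are placeholders.
# FARMER_KEY / CONTRACT_ADDRESS are hypothetical variables standing in for your own keys.

numa_plot_cmd() {
    local node="$1"; shift
    # --cpunodebind keeps the threads on one socket, --membind keeps RAM on the same node.
    echo "numactl --cpunodebind=$node --membind=$node $*"
}

# -f farmer key, -c pool contract address (use -p with a pool key instead for old-style plots).
numa_plot_cmd 0 ./chia_plot -n 1 -r 12 \
    -t /mnt/raid0/ -d /mnt/final/ \
    -f "${FARMER_KEY:-FARMER_KEY_HERE}" -c "${CONTRACT_ADDRESS:-CONTRACT_ADDRESS_HERE}"
```

`numactl --hardware` lists the nodes and which cores and how much memory belong to each, so you can check that node 0 really is the socket you want.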

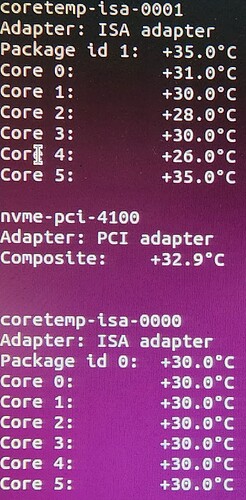

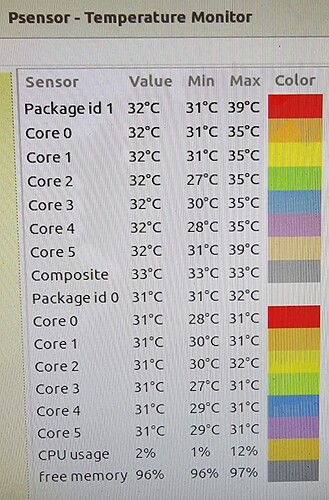

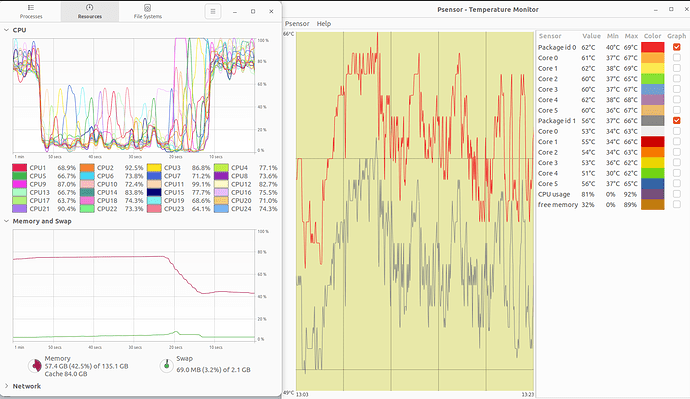

What results do you get from hdparm when testing your RAID arrays? Please provide a screenshot of the resource monitor with both screens: CPU (middle) and processes (left), where write speeds are visible. Also, run sensors and post the output here.

Actually, have you checked messages / syslog or journalctl for potential warnings / errors?
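To collect all of that in one go, a small script like this might do. It is only a sketch: `/dev/md0` is a placeholder for your array, and `run_if_present` and `collect_diag` are helper names I made up. Tools that are not installed are skipped rather than stopping the run.

```shell
#!/usr/bin/env bash
# Sketch only: gather disk timings, temperatures, and log warnings in one pass.
# Assumption: the array is /dev/md0 (placeholder; pass your own device instead).

# Run a tool only if it is installed, so one missing package does not stop the rest.
run_if_present() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "### $*"
        "$@"
    else
        echo "skipped: $1 not installed"
    fi
}

collect_diag() {
    local dev="${1:-/dev/md0}"
    run_if_present hdparm -tT "$dev"                     # cached and buffered read timings (needs root)
    run_if_present sensors                               # CPU / board temperatures (lm-sensors)
    run_if_present journalctl -b -p warning --no-pager   # warnings and worse since this boot
    run_if_present dmesg --level=err,warn                # kernel errors / warnings, e.g. from disks
}
```

Running `collect_diag /dev/md0 > diag.txt 2>&1` as root leaves everything in one file that you can paste here.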

You can also install bpytop, as it gives more info (e.g., CPU / core temps, disk IO, …).