Are you going to mod the servers for lower noise emissions? That’s a big issue I’m expecting with buying and running an actual Chia server.

Take a look at that thread:

That farmer/harvester was a very strong, clean setup with 500TB of locally attached disk space. For whatever reason, the Chia node couldn’t handle that load. Switching to Flex fixed the problem. And no, that was not dust-storm related.

I would assume that with that amount of disk space you will be self-pooling, and as such you may not get the same feedback that pools provide (e.g., everything looks fine locally, but still no wins).
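To put the "still no wins" point in numbers: when self-pooling, the only feedback is an actual block win, and wins arrive roughly at random in proportion to your share of netspace. A minimal Python sketch, assuming Chia's target of 4608 blocks per day and a simple Poisson model (it ignores plot-size and netspace-growth effects). The netspace value in the example is a hypothetical placeholder, not a measurement:

```python
import math

# Chia targets an average of 4608 blocks per day (one every 18.75 seconds).
BLOCKS_PER_DAY = 4608


def expected_days_per_win(farm_size: float, netspace: float) -> float:
    """Expected days between block wins; farm_size and netspace in the same unit."""
    share = farm_size / netspace
    return 1.0 / (share * BLOCKS_PER_DAY)


def prob_no_win(farm_size: float, netspace: float, days: float) -> float:
    """Poisson probability of winning zero blocks over `days` days."""
    expected_wins = days / expected_days_per_win(farm_size, netspace)
    return math.exp(-expected_wins)


if __name__ == "__main__":
    farm_tb = 500              # roughly the farm size discussed above
    netspace_tb = 30_000_000   # HYPOTHETICAL netspace (30 EB); look up the current value
    print(f"expected days per win: {expected_days_per_win(farm_tb, netspace_tb):.1f}")
    print(f"chance of no win in 30 days: {prob_no_win(farm_tb, netspace_tb, 30):.1%}")
```

With a small share of netspace, long dry spells are expected, so a lack of wins alone doesn't prove something is broken; pools give much faster feedback through partials.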

Still, it would be nice to see how far you can push it.

They are definitely loud! I was running them in my laundry room, but I’ve already run out of space in my 27U rack, so I had to get a full 42U rack for the garage (it wouldn’t fit in the laundry room). I also installed two more 240V outlets in my garage under the breaker box to run everything. So noise won’t be as big of an issue for me, since the servers are out of my living space now.

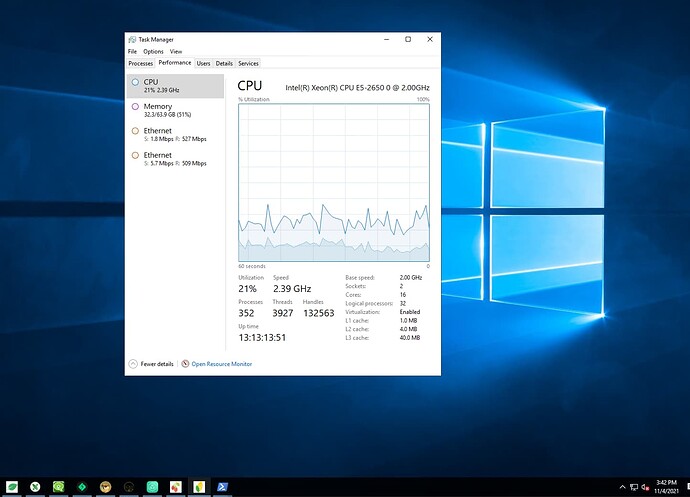

I’m not sure of the specifics of his setup or why he’d be having problems, but I’ve had no issues. I’m up to about 435TB in plots so far and adding another hundred or so a day. When I first set everything up and copied plots from drive to drive, I could get 8 file copies going at once, each at over 75MB/s. I’m using an old 8-lane 6Gbps LSI SAS card in a Dell R720 with dual E5-2650s, so 16 cores total, and only 64GB of RAM. I’m also running 8 forks alongside Chia. My lookup times are all sub-second. I’m actually looking at moving to an even older Dell R610, just because I think the R720 is overpowered for a farmer. The CPUs just twiddle their thumbs.
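Those copy numbers leave plenty of headroom on the HBA side. A rough Python check, assuming the 6Gbps SAS lanes use 8b/10b encoding (about 600MB/s of payload per lane), that both the source and destination drive of each copy hang off the same card, and ignoring PCIe slot limits and protocol overhead:

```python
def lane_mb_per_s(gbps: float) -> float:
    """Payload bandwidth of one 8b/10b-encoded lane (10 line bits per data byte)."""
    return gbps * 1000 / 10


def hba_utilization(lanes: int, gbps: float, streams: int, mb_per_stream: float) -> float:
    """Fraction of the HBA's combined lane bandwidth used by concurrent streams."""
    capacity = lanes * lane_mb_per_s(gbps)
    demand = streams * mb_per_stream
    return demand / capacity


if __name__ == "__main__":
    # 8-lane 6Gbps card; 8 copies at ~75MB/s, each counted twice (one read + one write)
    print(f"{hba_utilization(8, 6, 2 * 8, 75):.1%} of lane bandwidth")
```

Even counting both sides of every copy, this comes out to 25.0%, so on these assumptions the card itself is nowhere near saturated.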

Liveshot:

The power consumption of your motherboard setup is mostly the CPU and the SAS/SATA controllers; everything else is roughly in the same ballpark across different platforms. Maybe one option would be to switch to the E5-2650L v2? It is a 10-core processor, but with a lower clock, so it draws a bit less power.

Are you plotting with them too or just harvesting? Are you still trying to run Storj and/or other storage networks in parallel?

Have the Chia forks paid out so far?

Just full nodes/farming/harvesting, no plotting. Although I have 3 other plotters that are constantly transferring plots over the network - you’ll notice the network traffic in the screenshot above showing an incoming plot.

Yep, still running Storj as well! Screenshot taken while Storj running in background.

I haven’t traded or sold anything yet - everything just goes to cold wallets. The forks might not ever be worth anything but I figure I might as well farm them since I have plenty of spare CPU cycles…

That washer/dryer hookup, though

It’s an impressive setup.

Can you guide me on something? Why are you running 16 VMs, one for each fork? I thought we could run all the forks from a single OS install or machine.

Yes, you certainly can run all forks from a single machine. Originally I was doing this for security - this was back when forks first came out and so I was concerned about running them on the same machine as my main farmer. I don’t run separate VMs anymore. Here are my latest updates: https://chiaforum.com/t/the-journey-to-one-petabyte/

Sorry to hijack or dig up this older thread, but I stumbled upon that discussion.

@enderTown, since you have a lot of experience with those JMB585 SATA controllers on PCIe-to-SATA adapters, as well as the JMB575 1-to-5 SATA multiplier cards, I have a few questions.

I recently bought the latter (ADP6ST0-J02 according to the PCB). I hooked it up to a (sacrificial) test system with just one old 500GB spare SATA drive at first, to check whether it works at all. I noticed right away that it got significantly hot to the touch. The tiny heat spreader is glued on securely, so I can’t easily check which chip is actually used. I assume it’s a JMB575; at least that’s what the box and description say.

Is it normal that those multipliers get that hot?

Did you hook them up via the 15-pin SATA connector or USB?

I then connected a secondary drive, but it wasn’t recognized in Windows. Any idea what I am doing wrong?

Thanks a lot in advance!

Those look to be the newer versions of the ones I have - mine don’t have SATA power connectors on them if you look at pics above and they also don’t have heat spreaders. I never noticed the chips getting very hot. I bet those newer versions are faster though!

As for the second drive not working, that usually means that your host SATA connection doesn’t support port multipliers. The first one is just passed through but the other 4 ports won’t work. Are you hooking it straight to the SATA ports on motherboard? Most motherboards don’t support PMs - you’ll need to get an add-in PCI card that supports them. See above for examples of those too!

Actually, some time ago I purchased one such multiplier as well. My server (TYAN S5512 / Xeon E3-1220L v2 - yes, it is 2 cores only, as it is just a harvester) was running Win 10 Pro, and as you described, just one port was functional. However, when I installed Linux on that box, all ports started to work. So maybe Linux is more forgiving and compensates better than Windows.

Hello @enderTown and fellow miners!

I have a question about those 12V-to-5V converters:

Ten hard disks are connected in parallel to one 12V-to-5V converter and to the 12V output of the PSU.

At the same time, the converters are connected in parallel to the PSU. Is that correct?

Yep that’s right!

Thank you for your reply! I received a PCIe SATA card, or to be precise an M.2-to-5x-SATA card, which uses the JMB585 chip and supposedly supports FIS-based switching. If I connect the aforementioned port multiplier (JMB575-based) to it, Windows still recognizes only one drive.

What is odd is that it seems to randomly pick one of the two drives currently connected for testing purposes.

Are there drivers needed for Windows?

EDIT:

Yes, but it is hard to get decent information from AliExpress vendors.

I did a lot of trial-and-error searching for JMB585 bridge drivers as well as JMB575 port multiplier drivers, and found that, for me, the following worked: 10 Port SATA III to PCIe 3.0 x1 NON-RAID Expansion Card

Interesting thread to read now, with Chia at around $40, compared to when these posts were made. I’m somewhat in the same boat: back then the Chia price was about 50% higher than it is now.

Not sure if the OP is around or cares at this point, but I would be curious. SATA isn’t designed for what you initially built. I think you discovered that because of the issues that arose. Am I right?

In other words, SAS, being a scalable storage architecture, is designed for exactly the kind of build you started with. As for the startup issue, SAS HBAs and drives can and do deal with that; they are designed for it. SATA drives are not. When it comes to cabling and adapters, server drives have massive expandability, with HBAs and cable options for connecting many, many drives.
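Assuming the startup issue here is the spin-up surge (every drive pulling its peak 12V current at the same moment), this is where staggered spin-up, which SAS HBAs and backplanes commonly support, helps. A small Python sketch of the worst-case 12V draw; the 2.0A spin-up and 0.5A idle per-drive figures are hypothetical placeholders, so check your drives' datasheets:

```python
def peak_spinup_amps(drives: int, spinup_a: float, idle_a: float, group_size: int) -> float:
    """Worst-case 12V current when drives spin up `group_size` at a time.

    Assumes each group has settled to idle current before the next group starts.
    """
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    peak = 0.0
    spun = 0  # drives already spun up and idling
    while spun < drives:
        batch = min(group_size, drives - spun)
        peak = max(peak, spun * idle_a + batch * spinup_a)
        spun += batch
    return peak


if __name__ == "__main__":
    SPINUP_A, IDLE_A = 2.0, 0.5  # HYPOTHETICAL per-drive 12V currents
    print("all at once:", peak_spinup_amps(40, SPINUP_A, IDLE_A, 40), "A")
    print("4 at a time:", peak_spinup_amps(40, SPINUP_A, IDLE_A, 4), "A")
```

With these placeholder numbers, staggering 40 drives in groups of four cuts the peak from 80A to 26A, which is why a supply that is fine at steady state can still be overloaded when everything spins up together.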

So please tell me if I’m wrong, but did the initial plan simply fail because of choosing SATA vs. SAS?

As for the vibration issues, I would suggest that anything from Walmart is trash quality. If you want a sturdy, solid rack with less chance of vibration issues, then going to a food service supplier is the way to go. You won’t get as many racks for the money, but there are other ways of hanging drives. I would say this to anyone: buy the heavier rack. Shop by weight, not by price. A lighter rack might look the same, but it will be shit quality. Lighter is cheaper to manufacture and cheaper to transport. Make it look legit: the Walmart way.

I’m with you on paying the least amount per TB possible, although you mentioned moving to bigger drives. With XCH’s value down 50% now, do you stand by that choice, or was your original plan with smaller drives the better way to go? Yes, I understand your adapter and cable issues, but that’s not my point. If you could have bought SAS drives and built this out like an open-air server, accounting for HBAs and cables, and still paid far less than for big hard drives, would you rethink things?

I can imagine that the experience and issues might cloud your perspective, which is understandable. And you may not know the world of SAS, HBAs and cables.

Vibration is an interesting aspect to this. I will say one thing: metal doesn’t bend or break. For these purposes, metal vs. plastic is no comparison; I do not hang anything off anything plastic. I’m just not sure whether SAS drives can manage vibration better. They are far more robust, so perhaps it is less of an issue. What anti-vibration measures does a server rack have in place? When we’re talking SATA, though, I would say all bets are off. I’m not an expert on this aspect, but vibration = bad any way you slice it. In that sense, I’m not mixing plotting with farming on the same unit. If farming requires little hard drive movement, then I don’t see an issue. For those who care, I don’t plot with SSDs. It’s SAS or nothing.

Anyways I appreciate the thread you started and sharing your trials and tribulations. I do wonder though how you view things now through the lens of a 50% less valuable XCH. That is, if you are still actively farming Chia.

Yep! See this thread for the continuation and updates: The Journey to One Petabyte - Chia Farming & Harvesting - Chia Forum

Nope! I just got tired of LARPing as an enterprise JBOD designer

Agreed! I didn’t have any vibration issues but I do like the heavier racks and JBODs now that I have them (see updated thread)

If your goal is the cheapest $/TB, smaller drives are the way to go. If you have cheap electricity and plenty of connection points (SAS or SATA), then it makes sense. Any drive uses $7-10/year in electricity at 10-12¢/kWh. For the smallest drives, you are paying maybe $7-8/TB if you buy in bulk or find good deals, but my target cost per drive for connections (SAS JBODs in my case) is about $10. So if you just plan on running them for a few years and then upgrading, smaller drives might make sense over larger drives, especially if you already have the connections. If you are out of connection points, buying larger drives might make sense so you don’t have to buy as many more connections (SAS/HBA/JBOD/etc).
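The trade-off above can be put into a quick per-TB calculation. A minimal Python sketch, assuming the rough figures from the post ($7-10/year of electricity per drive, about $10 of connection cost per drive); the drive sizes and prices in the example are hypothetical, not real quotes:

```python
def annual_power_cost(watts: float, usd_per_kwh: float) -> float:
    """Electricity cost of running a constant load for one year (8760 hours)."""
    return watts * 8760 / 1000 * usd_per_kwh


def cost_per_tb(capacity_tb: float, price_per_tb: float, years: float,
                power_per_year: float = 8.5, connection_cost: float = 10.0) -> float:
    """Total cost per TB: drive + connection + electricity over `years`."""
    total = capacity_tb * price_per_tb + connection_cost + power_per_year * years
    return total / capacity_tb


if __name__ == "__main__":
    # ~8-9W per drive at 10-12c/kWh lands in the post's $7-10/year range
    print(f"{annual_power_cost(8, 0.10):.2f} to {annual_power_cost(9, 0.12):.2f} USD/year")
    # HYPOTHETICAL prices: small used drive vs. large drive, run for 3 years
    for label, tb, price in [("3TB @ $7.5/TB", 3, 7.5), ("18TB @ $15/TB", 18, 15.0)]:
        with_conn = cost_per_tb(tb, price, 3)
        have_conn = cost_per_tb(tb, price, 3, connection_cost=0.0)
        print(f"{label}: ${with_conn:.2f}/TB (new connection), ${have_conn:.2f}/TB (existing)")
```

With these made-up prices, the small drive only comes out ahead when the connection is already paid for, which matches the point above; plug in your own prices and electricity rate.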

YES! See more details in continuation thread above.

Yep! And growing. Check out me and Jerod (Digital Spaceport) unloading our latest auction win here:

Digital Spaceport Storage Wars - The Breakdown - YouTube

Digital Spaceport Server Wars Final Analysis - With Josh Painter (Endertown, Catmos) - YouTube

If you are scaling up to petabytes, you’ll want to stop buying retail (or even ebay) and start looking for local/state auction deals on lots of server equipment!

Hope that helps, good luck!

Hey thank you for the great reply. Amazing contributions. I will check your other post. I’ve watched the Spaceport videos and had no idea that was you.

Just a quick note on what you’ve said. My understanding is that SAS drives are tuned to handle slightly warmer temps. I also know SAS drives are far more robust. I guess in the next couple of years we will see how retail drives, especially the external ones, hold up. If my SAS drives last forever, then that makes up for some added power costs.

I’ve done my numbers to the bone. I add cable and HBA costs on a per-drive basis, and I can’t come close with any other storage solution. I get that everyone’s mileage will vary, but I can get prices that cannot be touched. Believe me, I am very thorough in researching and finding sources.

JBODs are in my future, just not right now. I can only invest in hardware expenses that will directly increase my yield. The server gear is industry proven but I need to get returns on investment before I step up into that realm. I also want to show newcomers the most economical way to enter this space.

Thanks again for all your sharing of insights and experience.

The DC/DC converters work really well, but I’m experiencing an issue with the cables/connectors heating up.

Are your connectors on the breakout board hot when you connect 10 HDDs on a single cable?

I’ve tried using a regular 750W modular PC PSU (with a GPU cable port), but the connectors become uncomfortably hot. I suspect that my PSU cables simply aren’t capable of handling these DC/DC converters. The cables are marked 18 AWG.

I attempted to connect 10 drives, but it seems that may be too much due to the heating issue. Connecting 5 drives per single 12V PSU cable seems to be okay.
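A rough way to see why 10 drives per cable runs hot while 5 seems okay: in the wiring described earlier in the thread, each drive's 12V current comes straight from the PSU cable, and its 5V current is supplied by the converter, which itself draws from the same 12V cable. A Python sketch; the per-drive currents (0.5A at 12V, 0.6A at 5V), the 90% converter efficiency and the three 12V conductors per cable are hypothetical placeholders, so substitute your drives' datasheet values and your cable's actual wire count:

```python
def cable_amps_12v(drives: int, i12_a: float, i5_a: float, converter_eff: float) -> float:
    """Steady-state 12V current on one cable feeding `drives` drives plus their 5V converter.

    The 5V load is reflected to the converter's 12V input: I_in = 5V * I5 / (12V * efficiency).
    """
    i5_on_12v = i5_a * 5.0 / (12.0 * converter_eff)
    return drives * (i12_a + i5_on_12v)


def relative_heating(amps_a: float, amps_b: float) -> float:
    """Ratio of I^2*R heating in the same wire/contact (resistance assumed constant)."""
    return (amps_a / amps_b) ** 2


if __name__ == "__main__":
    I12, I5, EFF, CONDUCTORS = 0.5, 0.6, 0.9, 3  # HYPOTHETICAL values
    for n in (5, 10):
        amps = cable_amps_12v(n, I12, I5, EFF)
        print(f"{n} drives: {amps:.1f}A total, {amps / CONDUCTORS:.2f}A per 12V wire")
    ratio = relative_heating(cable_amps_12v(10, I12, I5, EFF), cable_amps_12v(5, I12, I5, EFF))
    print(f"10 vs 5 drives: {ratio:.1f}x the resistive heating")
```

Doubling the drives on one cable doubles its current and roughly quadruples the resistive heating in the same wires and contacts (and spin-up currents are higher still), so the heating seen at 10 drives is consistent with simple I²R scaling.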

Do you recommend connecting 10 or 5 HDDs per single DC/DC converter/cable when utilizing a server PSU with a breakout board?

Thank you for your assistance.