A post was merged into an existing topic: Prevent External USB HDD Disk Sleeping

Thank you for your answer, I hope you solved your problem.

I still find it odd this is happening.

How much data is exchanged for proofing? I didn’t think it was much at all ?

Does anyone use a linux setup for harvesting and have this issue? Remember there is a performance hit on plotting so harvesting might also be slower.

I use a synology nas forplot storage and haven’t see the issue but I am no where near the amount of proofs so I can’t tell if I have this issue. Right now it’s subsecond responses.

Updated with overnight harvester times:

-

HTPC Phantom Canyon, all USB connected drives plus 3 network shares that map to 18tb WD elements dump drives

- avg 1.04s

- median 0.45

- max 42s

- min 0.04

-

2values over 30s

- avg 3.7s

- median 0.64s

- max 150s

- min 0.11s

-

243/ 8361 values over 30s

- avg 8.29s

- median 4.2s

- max 265.4s

- min 0.24

-

325/ 8242 values over 30s

Doesn’t look like setting “write to these disks to keep them awake” helped much.

The good news is that all the USB drives attached to the Ryzen and the HTPC have been migrated to the server JBOD where I think they will do very well on response times. So that part is over.

The bad news is that, after 3 days of analysis and tweaking, I think NASes, unless you run local harvesters on them, are basically dead for the purposes of Chia. It’s not too bad, 325 out of 8242 is only 4% of requests timing out and reaching the 30 second lmit – but I also saw 3% of requests time out with a ton of USB attached drives. The real story is the median times though. A median of 4.2 seconds via the NASes is much worse than a median of under a second!

And I still have two fairly full NAS’es to migrate data off of… but I’m not giving up! I will get the data off these NASes!

Same results as before (see above for system descriptions), but I’ve gotten it all down to excel / Google Sheets values so I can paste it in real quick:

| intel | htpc | amd | datacenter JBOD | |

|---|---|---|---|---|

| avg | 14.85 | 0.92 | 1.49 | 0.61 |

| median | 3.97 | 0.06 | 0.27 | 0.47 |

| min | 0.22 | 0.00 | 0.03 | 0.27 |

| max | 76.4 | 83.8 | 5.6 | |

| over 30s | 10 | 42 | 0 | |

| total checks | 5896 | 1472 | 6499 | 1303 |

| percent over 30s | 0.7% | 0.6% | 0.0% |

This is with a far more limited number of plots on the htpc and AMD side; most of those drives have been migrated to the datacenter JBOD – which I’ve added as a column on the right.

This is still with two relatively full 90tb NAS’es under the “intel” column. Just to confirm @farmerfm how many over 30 seconds harvester responses do you see when running the harvester directly on the NAS?

I have bad news for the Chia team.

Even with a very sophisticated FAST JBOD setup, you will see response times over 5 seconds at times. So whoever it was on the Chia team that came up with that “if it’s over 5 seconds, you have a bad config!” rule is… kind of huffing glue? We’re dealing with hard drives here. Sometimes things are gonna happen, and 5 seconds is a pretty rough level to make a warning, and 30 seconds… basically isn’t enough time to account for hard drive variability on large farms.

I expect to see a LOT of people making a LOT of noise about the soft 5 second and hard 30 second harvester rules as these plot farms grow indefinitely.

This is awesome data Jeff!! Thanks for all the work in collecting and analyzing it!!

Since I’ve set up the remote harvesters, there hasn’t been a single response time over 2 seconds, let alone 30. Caveat: the biggest individual harvester I have is 400 plots atm, and it’s only been running for ~36 hours.

If there’s some script you’d like me to run on my logs, happy to do that.

Just gonna throw this out there cause I’m curious if ANY changes will occur due to this

Has anyone tried changing the block/cluster size of the drives from the 4kb default to something larger, like 64k or even 2mb for the lols?

I’m no computer expert, but I do have terabytes of interpolated high res videos on slow wheezing 5400rpm drives which jitter and cry when I’m getting multiple machines to access them all at once with the default block/cluster size, but are fine when it’s manually set higher

Again, no computer expert, but it helped my seek times

I used default ext4 when setting 'em up. I don’t think block size was even an option; you had the choice of btrfs or ext4 and I reflexively went with the older, more mature filesystem.

I messed around with allocation size a bit, I did see a plot speed improvement. A few minutes. Benchmarking Different Settings for K32 – The Chia Farmer

Today’s data on harvester times! See earlier posts for explanations

| intel / nas | htpc | amd | datacenter JBOD | |

|---|---|---|---|---|

| avg | 3.59 | 1.59 | 0.48 | 0.61 |

| median | 3.51 | 0.12 | 0.13 | 0.47 |

| min | 0.16 | 0.02 | 0.02 | 0.27 |

| max | 8.6 | 5.6 | ||

| over 30s | 11 | 13 | 0 | 0 |

| total | 3956 | 1526 | 4383 | 1303 |

| percent over 30s | 0.3% | 0.9% | 0.0% | 0.0% |

Definitely improving as I move things off the NAS. I do think the dev team’s idea that proofs should never be over 5 seconds is a bit of a fantasy as your farm grows… spinny rust hard drives don’t always like to play nice, especially when you have vast numbers of them in use.

One thing to note is that intense copying of files will affect your proof times. I’m not sure how to solve this if you want the drive with a bunch of plots to stay online while you are filling it… if you are willing to keep the drive offline until it is full, then no issues.

My guess is it worked fine in testnet with k25 (600 MB) plots

I have only 53 plots and today i found in my logs :

> 2021-05-05T17:28:47.155 harvester chia.harvester.harvester: INFO 1 plots were eligible for farming 01cdc7e639... Found 0 proofs. Time: 12.82601 s. Total 53 plots

> 2021-05-05T17:28:51.560 harvester chia.harvester.harvester: INFO 0 plots were eligible for farming 01cdc7e639... Found 0 proofs. Time: 0.00128 s. Total 53 plots

> 2021-05-05T17:29:00.311 harvester chia.harvester.harvester: INFO 1 plots were eligible for farming 905130b69d... Found 0 proofs. Time: 0.18622 s. Total 53 plots

> 2021-05-05T17:29:10.432 harvester chia.harvester.harvester: INFO 1 plots were eligible for farming 905130b69d... Found 0 proofs. Time: 0.06443 s. Total 53 plots

> 2021-05-05T17:29:18.068 harvester chia.harvester.harvester: INFO 0 plots were eligible for farming 905130b69d... Found 0 proofs. Time: 0.00141 s. Total 53 plots

> 2021-05-05T17:29:27.697 harvester chia.harvester.harvester: INFO 0 plots were eligible for farming 905130b69d... Found 0 proofs. Time: 0.00157 s. Total 53 plots

> 2021-05-05T17:29:37.865 harvester chia.harvester.harvester: INFO 0 plots were eligible for farming 905130b69d... Found 0 proofs. Time: 0.00165 s. Total 53 plots

> 2021-05-05T17:29:45.711 harvester chia.harvester.harvester: INFO 1 plots were eligible for farming 905130b69d... Found 0 proofs. Time: 0.07781 s. Total 53 plots

and

> 021-05-05T17:28:47.155 harvester chia.harvester.harvester: WARNING Looking up qualities on /.plot took: 12.825770854949951. This should be below 5 seconds to minimize risk of losing rewards.

I have few this same warning, and i don’t know why i have this 12s with 53 plots and internal disc connected via sata

Could be many reasons, could be random disk issues, could be you were copying a bunch of files to that disk, who knows… as long as it is under 30 seconds you are OK. That’s the hard cutoff.

What kind of network was this on. What kind of settings for power on nas? what kind of spin up times on disks? I dont want to give up on my qnap 653Ds as I have 4 of them.

Was thinking of going infiniband network as it has nanosecond latency if that could help. Can get some cheap industrial routers for 150 usd or so and 40gb network and get some used mellanox connect x 3 infiniband cards for also nanosecond latency to the computers/nas’es.

The network info is in the topic…

TL;DR avoid ANY kind of complexity; directly connect the drives in the simplest possible way with the fewest layers between the OS and the disks.

Keep it dumb and simple. PREACH IT! ![]()

I had similar issue with 2000+ plots, lots of warning about lookup time > 5sec. My setup consists of x nr of plotters and a full node, each plotter have multiple external USB HDD.

I have a script that writes to the external drives every 20sec to keep them from falling a sleep, that does not help with lookup times, I don’t think it reads from the drive unless harvester finds something.

In my case I determent that its was number of plots per harvester that was the problem. I found the github wiki tutorial about “Farming-on-many-machines” witch helped to setup harvesters and spread the lookup. I installed harvesters on all my plotters and now I have 2000+ plots on spread out over multiple machines, with a average response time around 23ms.

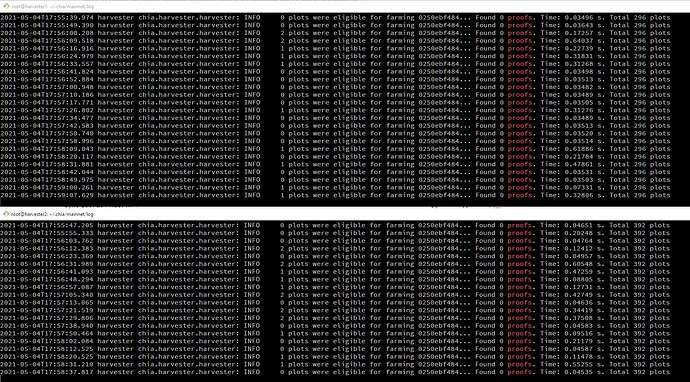

Note! In Farming-on-many-machines wiki page in section “To know its working” They did not mention any logging on harvester side. I have included a little change to config.yaml to log harvester actions.

harvester:

chia_ssl_ca:

crt: config/ssl/ca/chia_ca.crt

key: config/ssl/ca/chia_ca.key

farmer_peer:

host: IP.TO.FULL.NODE

port: 8447

logging: &id003

log_filename: log/debug-harvester.log

log_level: INFO

log_stdout: false

(Farming on many machines · Chia-Network/chia-blockchain Wiki · GitHub)

2000 plots in how many HDDs? and in what configuration

Right now I have about 20x16TB external USB, They are not full jet so around 100 plots on each drive. The drives are just as they are, no mods.

Just to clear the 23ms is average.

Most cases when we have a “1 plots were eligible” it’s average around 80ms.

If we have “0 plots were eligible” it only 3ms.

There are spikes up to 2sec.

It is much better then before, no more warning in the logs.

Please explain this - “I installed harvesters on all my plotters” ?

U just change string in config file - " host: IP.TO.FULL.NODE " ?

?

If i right understand, you have many PC and you connect HDD’s with differents plots to differents harvesters ? first pc = 500 plots, second PC = another 500 plots, etc… ? And change config file. Thats right?