Back in August I installed Ubuntu on a spare drive, set up madMAx, and completed a plot in just under 28 minutes.

I’ve not used Linux since, but I’ve decided to start replotting to NFT plots, and I thought it would be quicker to use Linux. Well, it should be, but it isn’t.

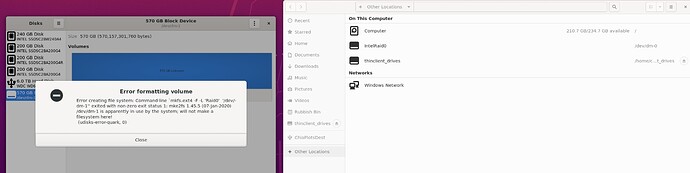

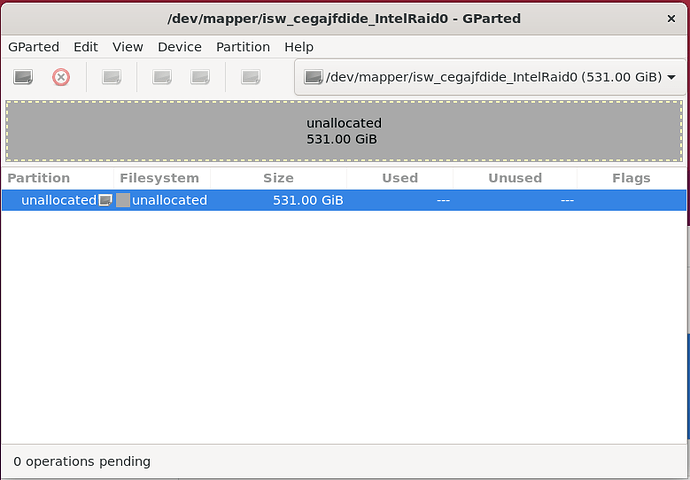

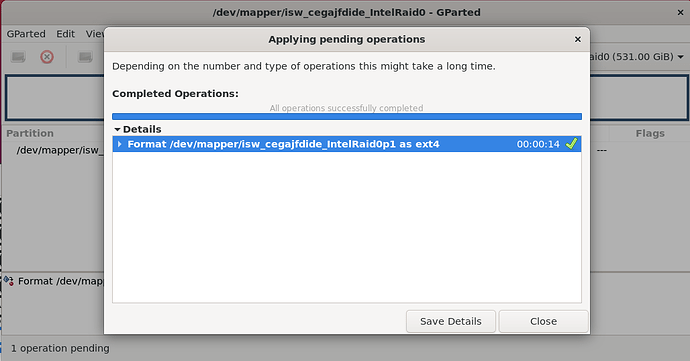

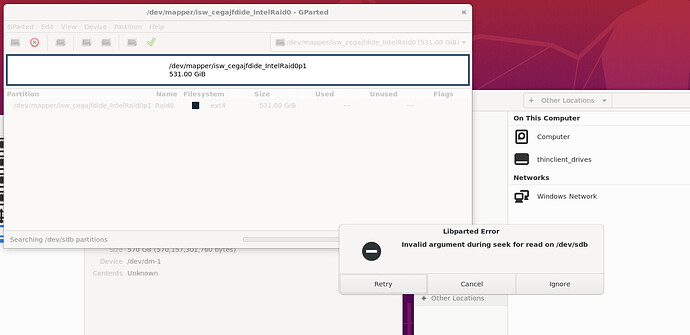

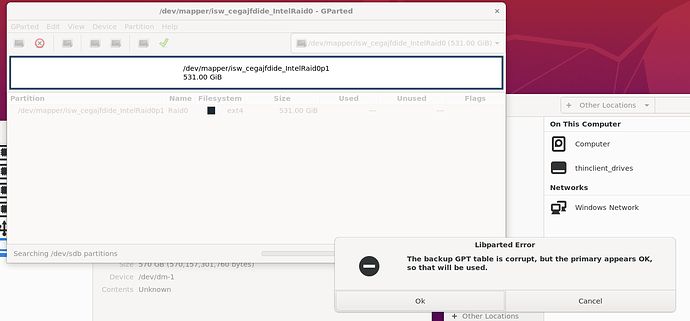

So I swapped back to my Linux disk and had an issue mounting the Intel RAID 0 array, but I sorted that out. I then set it plotting. The trouble is that it now takes a very long time, and I’ve no idea why, or how to work out why.

Plot from August:

Plot Name: plot-k32-2021-08-07-23-50-0cf995468c15e4502075760c8c771cf4e9786047933bd222c9e9d1896fcd7000

[P1] Table 1 took 10.3658 sec

[P1] Table 2 took 107.454 sec, found 4294972186 matches

[P1] Table 3 took 116.241 sec, found 4294939769 matches

[P1] Table 4 took 149.154 sec, found 4294857166 matches

[P1] Table 5 took 144.882 sec, found 4294837600 matches

[P1] Table 6 took 139.92 sec, found 4294693068 matches

[P1] Table 7 took 107.253 sec, found 4294323997 matches

Phase 1 took 775.284 sec

[P2] max_table_size = 4294972186

[P2] Table 7 scan took 10.1827 sec

[P2] Table 7 rewrite took 29.5417 sec, dropped 0 entries (0 %)

[P2] Table 6 scan took 24.6915 sec

[P2] Table 6 rewrite took 42.7078 sec, dropped 581312049 entries (13.5356 %)

[P2] Table 5 scan took 28.8993 sec

[P2] Table 5 rewrite took 53.7792 sec, dropped 761997452 entries (17.7422 %)

[P2] Table 4 scan took 31.5449 sec

[P2] Table 4 rewrite took 40.0991 sec, dropped 828829447 entries (19.2982 %)

[P2] Table 3 scan took 31.3667 sec

[P2] Table 3 rewrite took 39.7457 sec, dropped 855087655 entries (19.9092 %)

[P2] Table 2 scan took 33.8771 sec

[P2] Table 2 rewrite took 39.254 sec, dropped 865582831 entries (20.1534 %)

Phase 2 took 421.838 sec

Wrote plot header with 268 bytes

[P3-1] Table 2 took 23.9975 sec, wrote 3429389355 right entries

[P3-2] Table 2 took 26.8031 sec, wrote 3429389355 left entries, 3429389355 final

[P3-1] Table 3 took 41.4292 sec, wrote 3439852114 right entries

[P3-2] Table 3 took 27.4749 sec, wrote 3439852114 left entries, 3439852114 final

[P3-1] Table 4 took 43.1801 sec, wrote 3466027719 right entries

[P3-2] Table 4 took 27.0932 sec, wrote 3466027719 left entries, 3466027719 final

[P3-1] Table 5 took 43.9725 sec, wrote 3532840148 right entries

[P3-2] Table 5 took 28.2409 sec, wrote 3532840148 left entries, 3532840148 final

[P3-1] Table 6 took 45.5923 sec, wrote 3713381019 right entries

[P3-2] Table 6 took 29.0528 sec, wrote 3713381019 left entries, 3713381019 final

[P3-1] Table 7 took 29.2331 sec, wrote 4294323997 right entries

[P3-2] Table 7 took 34.4663 sec, wrote 4294323997 left entries, 4294323997 final

Phase 3 took 405.008 sec, wrote 21875814352 entries to final plot

[P4] Starting to write C1 and C3 tables

[P4] Finished writing C1 and C3 tables

[P4] Writing C2 table

[P4] Finished writing C2 table

Phase 4 took 70.1642 sec, final plot size is 108827243588 bytes

Total plot creation time was 1672.37 sec (27.8728 min)
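To compare runs phase by phase, it can help to pull the per-phase totals out of the log. Below is a minimal Python sketch, assuming the log is saved as plain text with summary lines in the `Phase N took X sec` format shown above. It is not part of madMAx, and `phase_times` is a hypothetical helper.

```python
import re

# Matches madMAx summary lines such as "Phase 1 took 775.284 sec".
PHASE_RE = re.compile(r"^Phase (\d) took ([\d.]+) sec", re.MULTILINE)


def phase_times(log_text: str) -> dict[int, float]:
    """Return {phase number: seconds} for every 'Phase N took X sec' line."""
    return {int(n): float(s) for n, s in PHASE_RE.findall(log_text)}


# Summary lines copied from the August log above.
august_log = """
Phase 1 took 775.284 sec
Phase 2 took 421.838 sec
Phase 3 took 405.008 sec, wrote 21875814352 entries to final plot
Phase 4 took 70.1642 sec, final plot size is 108827243588 bytes
"""

times = phase_times(august_log)
total = sum(times.values())
for phase, secs in times.items():
    # Share of the summed phase time spent in each phase.
    print(f"Phase {phase}: {secs:8.1f} s ({secs / total:5.1%})")
print(f"Sum of phases: {total:.1f} s ({total / 60:.1f} min)")
```

On the August log the phases sum to about 1672.3 s, within a tenth of a second of the reported 1672.37 sec, with Phase 1 accounting for roughly 46% of the total.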

Plot from today. The first one I tried yesterday took over half an hour just for phase 1. P1 Table 1 takes about the same time as before, but after that it just goes so slooooooooowwwwwwww.

chiamining@chiamining-Precision-Tower-5810:~/chia-plotter/build$ ./chia_plot -k 32 -x 8444 -n 1 -r 18 -K 2 -u 256 -v 128 -G False -t /media/chiamining/IntelRaid0/ -2 /mnt/ramdisk/ -d /media/chiamining/ChiaPlotsDest/ -w -c -f

Multi-threaded pipelined Chia k32 plotter - 974d6e5

(Sponsored by Flexpool.io - Check them out if you’re looking for a secure and scalable Chia pool)

Final Directory: /media/chiamining/ChiaPlotsDest/

Number of Plots: 1

Crafting plot 1 out of 1

Process ID: 4254

Number of Threads: 18

Number of Buckets P1: 2^8 (256)

Number of Buckets P3+P4: 2^7 (128)

Pool Puzzle Hash: d5ea399b658453fbc84bc52076a879ad3fa39524aa00539d9511ebbc2322acd6

Farmer Public Key: 88f4ae9716dedee91fa73cc13cc1073fb332380d7424c7308a43f84be2c88193bcf450be3501406dd55d3b5df24f7919

Working Directory: /media/chiamining/IntelRaid0/

Working Directory 2: /mnt/ramdisk/

Plot Name: plot-k32-2021-11-14-15-52-38712183cdd4e560a06b9d104ea6e032047d2b498a1b0d55431e9ecaf6bc0cf4

[P1] Table 1 took 10.333 sec

[P1] Table 2 took 230.803 sec, found 4295055912 matches

[P1] Table 3 took 316.503 sec, found 4295173653 matches

[P1] Table 4 took 344.627 sec, found 4295161896 matches
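Putting the Phase 1 table timings from both runs side by side makes the pattern easier to see. Below is a minimal Python sketch, assuming both logs are available as text. `p1_table_times` is a hypothetical helper, not part of madMAx, and only the tables that finished in both runs (1 to 4) are compared.

```python
import re

# Matches Phase 1 table lines such as "[P1] Table 2 took 107.454 sec, ...".
P1_TABLE_RE = re.compile(r"\[P1\] Table (\d) took ([\d.]+) sec")


def p1_table_times(log_text: str) -> dict[int, float]:
    """Return {table number: seconds} for every '[P1] Table N took X sec' line."""
    return {int(t): float(s) for t, s in P1_TABLE_RE.findall(log_text)}


# Lines copied from the August log and from today's (unfinished) run.
august = p1_table_times("""
[P1] Table 1 took 10.3658 sec
[P1] Table 2 took 107.454 sec, found 4294972186 matches
[P1] Table 3 took 116.241 sec, found 4294939769 matches
[P1] Table 4 took 149.154 sec, found 4294857166 matches
""")
today = p1_table_times("""
[P1] Table 1 took 10.333 sec
[P1] Table 2 took 230.803 sec, found 4295055912 matches
[P1] Table 3 took 316.503 sec, found 4295173653 matches
[P1] Table 4 took 344.627 sec, found 4295161896 matches
""")

# Only compare tables that finished in both runs.
for table in sorted(august.keys() & today.keys()):
    ratio = today[table] / august[table]
    print(f"Table {table}: {august[table]:7.1f} s -> {today[table]:7.1f} s (x{ratio:.2f})")
```

Table 1 comes out essentially unchanged (a ratio of about 1.00), while Tables 2 to 4 take roughly 2.1 to 2.7 times as long as they did in August.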

Being a Linux noob, I’ve got no idea how to work out why it’s running so slowly now. I’m using a 110 GB RAM disk and a RAID 0 array. When I switch back to my Windows install, a plot completes in 33 minutes.

The CPU is an Intel Xeon E5-2699 v3 (18 cores, 2.30 GHz), and the machine has 128 GB of RAM.