That recommended 5 secs dates from the time before plot compression. Therefore, I don't think it is really feasible to recommend it to those who have compressed plots.

My understanding is that everything is OK as long as proof lookups average around or below 8 secs right now (a new recommendation?). On my farm, with proof lookups averaging around 7.5 secs, I see a couple of proofs at around 18-20 secs, and a handful at around 12-14 secs. Since you mentioned that the max is 28 secs, the average could probably rise by another 2-5 secs and the slowest proofs would still stay below 28 secs. The worst case is that the GPU gets congested from time to time and a couple of proofs fail. Most likely that loss will still be smaller than the loss from missed signage points.

I also think that right now it is important to watch the GPU utilization and not max it out (the number of proofs found per slot fluctuates, so GPU load can spill over into the next slot / proof check).
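To illustrate the spillage idea, here is a toy model in Python. It is only a sketch under my own assumptions: the function name `carry_over`, the per-slot work figures, and the 9-second slot length are all made up for illustration and are not taken from any harvester or GPU tool. Each slot has a fixed time budget; any GPU work that does not fit is carried into the next slot.

```python
def carry_over(work_per_slot, slot_secs):
    """Return the GPU backlog (in seconds) left over at the end of each slot.

    work_per_slot: seconds of GPU work arriving in each slot (hypothetical numbers)
    slot_secs:     time available per slot (an assumed, illustrative budget)
    """
    backlog = 0.0
    leftovers = []
    for work in work_per_slot:
        # Work still pending from earlier slots is added to the new work,
        # then the slot's time budget is subtracted; backlog cannot go negative.
        backlog = max(0.0, backlog + work - slot_secs)
        leftovers.append(backlog)
    return leftovers


if __name__ == "__main__":
    # One busy slot (12 secs of work) spills 3 secs into the following slot.
    print(carry_over([5, 12, 4, 3], slot_secs=9.0))
```

The point of the model is that a GPU that looks fine on average can still fall behind for a slot or two whenever a burst of proofs arrives, which is why leaving some headroom matters.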

I guess the bottom line is that the old 5 secs basically reflected HD access issues / load, whereas now the GPU load component also needs to be considered (the two point to different issues).

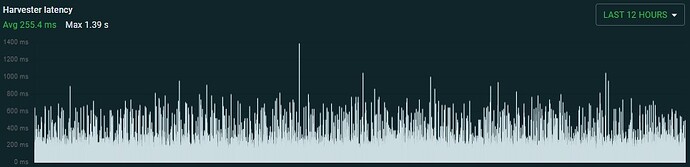

By the way, here is an example of my lookup times:

Searches:


- total performed: 9237
- average: 5.50 sec, min: 0.43 sec, max: 13.35 sec
  - 0-1 sec: [X_________] 0.15%, 14 occasions
  - 1-2 sec: [X_________] 0.09%, 8 occasions
  - 2-3 sec: [X_________] 2.10%, 194 occasions
  - 3-4 sec: [XX________] 10.96%, 1012 occasions
  - 4-5 sec: [XXX_______] 25.31%, 2338 occasions
  - 5-6 sec: [XXX_______] 27.71%, 2560 occasions
  - 6-7 sec: [XXX_______] 20.17%, 1863 occasions
  - 7-8 sec: [X_________] 9.20%, 850 occasions
  - 8-9 sec: [X_________] 3.11%, 287 occasions
  - 9-10 sec: [X_________] 1.02%, 94 occasions
  - 10+ sec: [X_________] 0.18%, 17 occasions
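For anyone who wants a similar breakdown of their own lookup times, below is a minimal Python sketch. It is not the script that produced the numbers above. The function name `summarize_lookups` and its parameters are hypothetical, and it assumes you have already extracted the lookup durations (in seconds) from your harvester logs into a plain list. The bar length is rounded up, which happens to match the bars shown above.

```python
from collections import Counter


def summarize_lookups(times, cap=10, bar_width=10):
    """Bucket lookup durations (seconds) into 1-second bins plus a final `cap`+ bin.

    Returns the report as a list of text lines.
    """
    if not times:
        raise ValueError("no lookup times given")

    total = len(times)
    # int() truncates, so 4.8 lands in the 4-5 bin; anything >= cap goes to the last bin.
    counts = Counter(min(int(t), cap) for t in times)

    lines = [
        f"total performed: {total}",
        f"average: {sum(times) / total:.2f} sec, "
        f"min: {min(times):.2f} sec, max: {max(times):.2f} sec",
    ]
    for b in range(cap + 1):
        n = counts.get(b, 0)
        # Integer ceiling of n / total * bar_width, so any non-empty bin shows at least one X.
        filled = (n * bar_width + total - 1) // total
        bar = "X" * filled + "_" * (bar_width - filled)
        label = f"{b}+ sec" if b == cap else f"{b}-{b + 1} sec"
        lines.append(f"{label}: [{bar}] {n / total:.2%}, {n} occasions")
    return lines


if __name__ == "__main__":
    # Made-up sample durations, only to show the output format.
    for line in summarize_lookups([0.5, 4.2, 4.8, 5.1, 12.0]):
        print(line)
```

Comparing the share of lookups in the upper bins before and after a change (more plots, a different compression level) is usually more telling than the average alone.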

The average on that harvester was 5.50 secs (based on logs, but it correlates with GPU load). However, my pool reports it as ~7.5 secs. I am not sure where those extra 2 seconds come from. If I recall correctly, the GPU is running at around 70%, but I still see some GPU load spillage when more proofs are found.