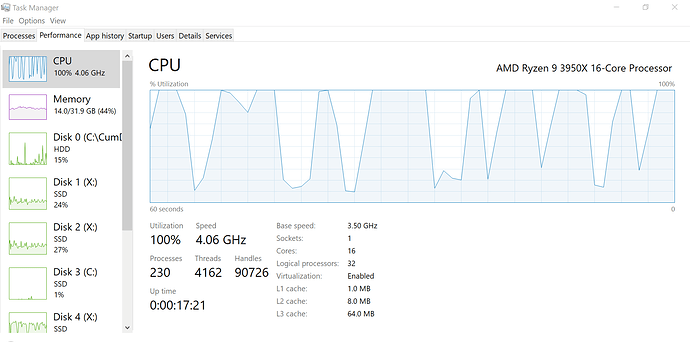

What could have gone wrong with my system?

CPU temp is only 50-60 °C

Freshly installed Windows

No overclocking

The M.2 drives in use are 3x WD Black SN750 SE in RAID 0

Looks more like CPU saturation (100% utilization), not throttling with those clipped tops. Maybe time to consider a 5950X or accept what you got.

While plotting, it's supposed to be at 100% all the way; alternating between highs and lows isn't normal.

Who told you that? If the CPU is not kept busy (at 100% all the time), then something else, like your drives (RAID or not), is perhaps not up to the task. In point of fact, RAIDing SSDs typically either does not increase performance significantly or can make it worse in some situations. Have you tried just using one NVMe, or two as tmp1 & tmp2?

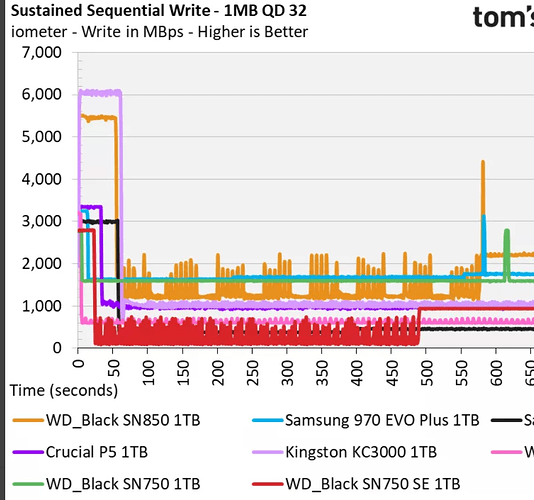

- Is the WD Black SN750 se good?

WD’s Black SN750 SE is a decent-performing gaming-oriented SSD. While it doesn’t deliver the best PCIe 4.0 performance, it competes well against many of the best PCIe 3.0 SSDs on the market and is listed at competitive prices.

We noticed some peculiar behavior during sustained write testing that we believe is caused by the SN750 SE’s DRAM-less architecture. After absorbing roughly 70GB of data at 2.9 GBps, write speed plummeted to a very inconsistent average of 250 MBps, with lows landing below 100 MBps. After writing roughly an additional 120GB of data while the cache was folding, write speeds shot up to a consistent 990 MBps for the remainder of the test.

So this behaviour might very well be the cause, although I’m not sure.
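To put rough numbers on the quoted behaviour, here is a minimal sketch that treats the three phases from the review (about 70 GB at 2.9 GB/s, then about 120 GB at 250 MB/s, then 990 MB/s) as a piecewise-constant model. The function name is mine, and assuming that plotting hits the drive the same way the review's sequential test did is a simplification, so take it as an illustration only.

```python
# Piecewise model of the SN750 SE sustained-write behaviour quoted above.
# Phase sizes and speeds come from the review; treating them as exact
# constants is an assumption made for illustration.
PHASES = [
    (70.0, 2.9),           # (GB absorbed, GB/s): fast cache
    (120.0, 0.25),         # cache folding, very slow
    (float("inf"), 0.99),  # steady state afterwards
]

def seconds_to_write(total_gb):
    """Return the modelled time in seconds to write total_gb to a fresh drive."""
    remaining = total_gb
    seconds = 0.0
    for size_gb, speed_gb_s in PHASES:
        written = min(remaining, size_gb)
        seconds += written / speed_gb_s
        remaining -= written
        if remaining <= 0:
            break
    return seconds

for gb in (70, 190, 250):
    t = seconds_to_write(gb)
    print(f"{gb} GB: {t:.0f} s, average {gb / t * 1000:.0f} MB/s")
```

In this model a 190 GB write averages under 400 MB/s, even though the first 70 GB went in at full speed.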

I guess maybe the more important question is, what kind of plot times are you getting?

For reference: my 3900x plotting on a single SN750 (not se) got something like 26-28 minute plots.

I have a couple of WD SN750s, and they seem to me to be as good as the Samsung Evo Plus. Actually, maybe better, as the WDs run cooler. I don’t really see a difference when plotting.

Although, both WDC and Samsung were doing BOM cost reduction with those NVMes, so maybe what I have (purchased a year ago) is not exactly the same as what is out there today.

Having said that, I would follow @Fuzeguy's suggestion: break that RAID, use one drive for tmp1 and one for tmp2, and start from there. I have tried to RAID my NVMes a few times (using either Windows Disk Management or Linux mdadm), but never got results better than just running a single one. Although, my tmp2 is in RAM.

I would also try PrimoCache, and put it in front of tmp2 (there is no point in using it if tmp1/tmp2 are combined). You can use ~20 GB for it. Although, I would get at least another 32 GB of RAM (if getting up to 128 GB is out of the question).

Most consumer grade NVMe drives are fine for everyday use (office work, social media, gaming, etc). Chia plotting, however, is in a different category, where you need either a high-end consumer grade NVMe drive or a commercial grade (data center grade) NVMe drive.

@Voodoo quoted a section from a Tom’s Hardware review.

Here is another part of that review:

“Official write specifications are only part of the performance picture. Most SSDs implement a write cache, which is a fast area of (usually) pseudo-SLC programmed flash that absorbs incoming data. Sustained write speeds can suffer tremendously once the workload spills outside of the cache and into the “native” TLC or QLC flash.”

Your sn750se NVMe drives are your bottleneck. When they are able to periodically feed your CPU, then your CPU usage goes up. Then, your CPU waits on your NVMe drives for more data (or, more likely, waits to write computed data to your NVMe drives).

NVMe drive specifications are generally based on the drive's faster cache, and not on the other 80% or 90% of the drive, which is slow.

Chia plotting quickly fills the cache, and then the NVMe performance drops, significantly.
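A very rough way to picture that starve-and-feed pattern is a producer/consumer model where the CPU can only be as busy as the drive lets it be. The drive speeds below are the ones from the Tom's Hardware excerpt; the CPU demand figure is purely hypothetical (I have not measured it), and the function name is just for this sketch.

```python
def cpu_busy_fraction(drive_mb_s, cpu_demand_mb_s):
    """Fraction of time the CPU has work in a simple producer/consumer model:
    it can never be busier than the drive's throughput allows."""
    return min(1.0, drive_mb_s / cpu_demand_mb_s)

# Hypothetical I/O rate needed to keep the CPU at 100%; not a measured value.
CPU_DEMAND_MB_S = 1000.0

# Drive speeds taken from the review excerpt quoted earlier in the thread.
for label, speed in [("in cache", 2900.0),
                     ("cache folding", 250.0),
                     ("after folding", 990.0)]:
    busy = cpu_busy_fraction(speed, CPU_DEMAND_MB_S)
    print(f"{label}: CPU about {busy:.0%} busy")
```

With those assumed numbers the CPU swings between fully loaded and mostly idle, which is the kind of sawtooth the utilization graph shows.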

Alas, the WD sn750se is not designed with Chia plotting in mind.

The only consumer grade NVMe drive that I am aware of that is good at Chia plotting is Samsung’s 980 Pro model.

It does slow down when its cache gets full. But not enough to starve the CPU (well, it depends on the CPU).

I use the 980 Pros with my 5950x, and they keep my CPU pinned nearly 100% of the time.

If I had a threadripper, or something faster, then I suspect that the 980 Pro would become a bottleneck, where a datacenter grade NVMe drive would be needed to keep the CPU busy enough.

Sorry, I missed that “se” from that WD NVMe. I have non-se SN 750, and those are comparable to Samsung 970 Evo Plus.

On the other hand, based on that chart from Tom’s Hardware (thanks to @Voodoo), it looks like there is a problem with the SE version of the WD SN750. On that chart, it is the outlier that for some reason has trouble running its Flash at “standard” speeds (500-1500 MBps). The sustained speed does settle at a “standard” level after about 8 minutes, which would suggest that either the controller they use is bad or the firmware on that controller is old. Either way, that NVMe is clearly one to avoid.

On the other hand, once the cache is exhausted, all those NVMes run at roughly the same speed, and that speed potentially reflects the Flash type (SLC/TLC/QLC) and/or Flash generation.

Also worth mentioning is that the chart reflects sustained sequential writes using big chunks. Still, even the fastest NVMe in that chart does not reach 2,000 MBps, which is below what PCIe 3/4 can carry.

Lastly, when plotting with MM, writes are not sequential and use rather smaller chunks, which causes significant drops in sustained speed. So, as much as that chart is a good guide to what to get, it may not reflect the actual performance of a given NVMe with MM.

There are many reasonable Chia plotting drives on the market. I’ve noticed it is the SSD controller that matters a lot. Samsung makes their own controllers, and theirs are very good drives. Among retail drives, any Phison PS5018-E18 based SSD is arguably going to have exemplary performance on PCIe 4.0 systems when used for plotting. And there are others; research before buying. There are online reviews for most any decent drive you might want to buy.

I specifically look for the type of testing @Voodoo presented above. It is very telling at a glance.
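If you want a crude version of that kind of test on your own drive, something like the sketch below works: write fixed-size chunks, force each one to the device, and log the speed per chunk. This is not how Tom's Hardware runs their test (I don't know their tooling); the function name and parameters are made up for this example, and the demo sizes are far too small to get past any real cache.

```python
import os
import tempfile
import time

def sustained_write_speeds(path, total_mb, chunk_mb):
    """Write total_mb of data to path in chunk_mb pieces and return the
    measured MB/s for each piece."""
    chunk = os.urandom(chunk_mb * 1024 * 1024)  # incompressible data
    speeds = []
    with open(path, "wb") as f:
        for _ in range(total_mb // chunk_mb):
            start = time.perf_counter()
            f.write(chunk)
            f.flush()
            os.fsync(f.fileno())  # push the data past the OS cache to the device
            elapsed = max(time.perf_counter() - start, 1e-9)
            speeds.append(chunk_mb / elapsed)
    return speeds

if __name__ == "__main__":
    # Tiny demo in a temp directory. For a meaningful result, point the path
    # at the drive under test and raise total_mb well past its cache size.
    with tempfile.TemporaryDirectory() as tmp:
        probe = os.path.join(tmp, "probe.bin")
        for i, mb_s in enumerate(sustained_write_speeds(probe, total_mb=64, chunk_mb=8)):
            print(f"chunk {i}: {mb_s:.0f} MB/s")
```

A sudden drop in the per-chunk numbers partway through a long run is the cache running out. Keep in mind that a long run like this eats into the drive's write endurance.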

I agree with everything you said. However, this one may be kind of misleading.

When you check that chart from Tom’s Hardware, the Flash speed for all those NVMes is well below PCIe 3 specs. So, if we assume that an NVMe is just its native Flash, PCIe 3 and PCIe 4 drives should have very similar sustained speeds. However, as every NVMe has a bit of faster cache (usually pseudo-SLC, as the review notes), the benefit of PCIe 4 basically comes only from that caching part. Once that cache is saturated, all those drives go back to those 500-1500 MBps speeds, which depend purely on Flash generation/type (for optimal reads/writes: sequential, big chunks).
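For reference, the raw link numbers are easy to work out. PCIe 3.0 runs at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, both with 128b/130b encoding, and an M.2 NVMe uses four lanes. The sketch below ignores protocol overhead, so real-world ceilings are a bit lower; the function name is just for this example.

```python
def pcie_x4_gb_s(gt_per_s, lanes=4):
    """Raw PCIe link bandwidth in GB/s for 128b/130b encoded generations,
    before protocol overhead."""
    return gt_per_s * (128 / 130) * lanes / 8

PCIE3_X4 = pcie_x4_gb_s(8.0)   # PCIe 3.0: 8 GT/s per lane
PCIE4_X4 = pcie_x4_gb_s(16.0)  # PCIe 4.0: 16 GT/s per lane

print(f"PCIe 3.0 x4: {PCIE3_X4:.2f} GB/s")
print(f"PCIe 4.0 x4: {PCIE4_X4:.2f} GB/s")

# Post-cache speed range read off the chart discussed above.
for post_cache_gb_s in (0.5, 1.5):
    share = post_cache_gb_s / PCIE3_X4
    print(f"{post_cache_gb_s} GB/s is {share:.0%} of a PCIe 3.0 x4 link")
```

So even the top of that 500-1500 MBps range uses well under half of a PCIe 3.0 x4 link, which is why the PCIe generation stops mattering once the cache is gone.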

Of course, newer Flash generations come with higher TBW, which is kind of a plus for Chia. Although, this is not quite what we intuitively think. On a mechanical drive, parts wear out, so once they go beyond some threshold, such drives will just produce errors. Flash, on the other hand, has two thresholds: one is gate leakage, the other is gate damage. TBW relates to gate leakage, meaning that if no sustained voltage is applied, cells slowly discharge and data is lost. However, when Flash is powered and in use, that leakage is not a problem for a long time. This is the reason we can plot well beyond the TBW specs provided.

There’s also the “1TB Samsung 970 pro” with MLC for sustained sequential write speed to consider perhaps?

Sure, that is also a good one.

Here is a quick description of those various Flash types: “What Do SLC, MLC, TLC, and QLC Mean?”. The bottom line is that as you go down through those types, the endurance (gate leakage) drops, so long-term storage suffers. So, when buying something for your OS or data that you want to keep, it is good to try to get as close to a single bit per cell as possible. For Chia, long-term storage is rather irrelevant, so going a bit down the line may save some money. Although, the higher-end the CPU, the faster the Flash needed, and the fastest is SLC. But again, Flash generation also makes a difference.

I guess what I am trying to say is that there are no technical miracles, and most of what we hear is just PR stuff. On the other hand, it could be that some models, whether from a good brand or not, are just horrible. That WDC SN750 SE looks like one of those bottom-level outliers. The fact that the performance of that model is so unpredictable may also mean that RAID performance is just horrible, as at any point one of the drives in the set may start acting up and degrade speeds.
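The RAID point can be shown with an idealised model: in RAID 0 each stripe is split evenly across the members, so a stripe is finished only when the slowest member finishes its share. That is a simplification (real controllers and host-based RAID add queueing and overhead), the function name is mine, and the speeds are the ones from the review excerpt earlier in the thread.

```python
def raid0_write_mb_s(member_speeds):
    """Idealised RAID 0 write throughput: every stripe is split evenly, so
    the array moves at the pace of its slowest member times the member count."""
    return len(member_speeds) * min(member_speeds)

all_in_cache = [2900.0, 2900.0, 2900.0]
one_folding = [2900.0, 2900.0, 250.0]   # one drive has hit its slow phase

print(f"all three in cache: {raid0_write_mb_s(all_in_cache):.0f} MB/s")
print(f"one drive folding:  {raid0_write_mb_s(one_folding):.0f} MB/s")
```

In this model a three-drive array with one member in its slow phase is slower than a single drive still in cache, so an unpredictable drive hurts a stripe set more than it hurts standalone use.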

There are a lot of enterprise drives around at a reasonable price now. I just picked up a 1.6TB Intel DC P3700 drive for £104, although bizarrely it was advertised as a 3600. Its health is at 96% with 1.7PB written; according to Intel it has 43.8 PBW endurance, or 24 TB written per day for five years.
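Those endurance figures are consistent with each other, as a quick check shows. The sketch assumes that the reported health is simply the unused share of the rated PBW, which is my guess at how the tool calculates it.

```python
RATED_PBW = 43.8   # Intel's endurance rating quoted above, in PB written
WRITTEN_PB = 1.7   # data written to this drive so far
YEARS = 5          # period the rating is spread over

# Daily write allowance implied by the rating.
tb_per_day = RATED_PBW * 1000 / (YEARS * 365)

# Assumption: health is the fraction of rated endurance not yet used.
health = 1 - WRITTEN_PB / RATED_PBW

print(f"allowance: {tb_per_day:.1f} TB written per day")
print(f"estimated health: {health:.0%}")
```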

I had been using six Intel S3710 200GB drives in RAID 0, but when I added a seventh it slowed down. These are rated at 3600 TBW.

How do you RAID them? Do you use an HBA?

Yes, the T7910 has an onboard HBA with eight ports.

Thank you. So, it looks like RAID over SAS/SATA works fine (as expected, since it is hardware accelerated), but it may be difficult to make it work over PCIe (for NVMes, where it is host based).