Sure, glad I could help. I think the 980 Pro 1TB will do A LOT better than the FireCuda for this purpose; it should certainly keep that CPU and memory busy and get you fast plots. On the PCIe cards: since you aren’t using any PCIe slots, you have plenty of bandwidth and room available there, so get 2 of these:


Although, as said, I believe those 2x 980Pro 1TB will serve you just fine already.

REVISED/UPDATE

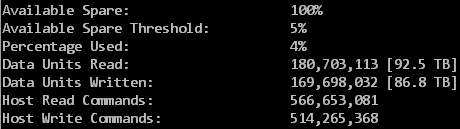

As for the TBW: this is a Corsair Force MP510 “B” version, which performs well but is only rated for 600 TBW. But the numbers:

So 86 TB written is 4% used? Extrapolating linearly (86 TB / 0.04), that would be about 2150 TBW for this kind of workload, well above the 600 TBW rating. That’s speculating a bit, though, since I’m not 100% sure about those values yet, and I also don’t know whether “percentage used” is linear.
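For anyone who wants to redo that math with their own drive, here is a minimal Python sketch of the same linear extrapolation. It assumes wear really is linear (which, as said, I don’t know), and the function name `projected_tbw` is just made up for illustration. The 86 TB, 4% and 600 TBW figures are the ones from this post.

```python
def projected_tbw(tb_written: float, percent_used: float) -> float:
    """Linearly extrapolate total endurance (in TB written) from wear so far.

    Assumption: the drive's "percentage used" grows linearly with data written.
    """
    if percent_used <= 0:
        raise ValueError("percent_used must be greater than zero")
    return tb_written * 100.0 / percent_used


# Figures from the post: 86 TB written, 4% used, 600 TBW rated.
written_tb = 86.0
used_pct = 4.0
rated_tbw = 600.0

estimate = projected_tbw(written_tb, used_pct)
print(f"Projected endurance: {estimate:.0f} TBW")
print(f"That is {estimate / rated_tbw:.1f}x the rated {rated_tbw:.0f} TBW")
```

Keep in mind the percentage is reported as a whole number, so at low values like 4% a single step up or down shifts the estimate a lot. The projection gets more trustworthy as the drive wears further.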

In the end, let’s see how long this stuff lasts. I feel that if you are building a plotter you are going to run it until it breaks anyway.

P.S. One last thing: get some heatsinks for those drives and a bit of airflow running over them! These drives can handle sustained load (which isn’t a normal desktop workload), but only if properly cooled.