That’s an insane deal, congratulations! There are many deals to be had out there. I bet my hat that most of these drives will run for a decade at least.

Hi @enderTown, first of all, thank you for sharing your project with the rest of the world. I am about to start my chia farm and was thinking about going the route of used server chassis and used server racks, but now that I’ve read through this thread I think I am going to do it the way you are doing it. I saw one of your comments in another thread showing your newest 3D design. Do you plan on making that one publicly available too? Besides allowing hard drive sliding, is it better than the other one in any meaningful way? I am willing to pay for it.

Thank you! I’m not sure which thread you are talking about, but the latest is on thingiverse here: Wire Rack mount for 3.5" Hard Drive by joshpainter - Thingiverse (Reminder to change your password on Thingiverse if you haven’t already - they were hacked recently)

Hey all, an update on my project. I’ve decided to tear down the beast and move over to SAS enterprise JBODs after finding some amazing deals. When I started this project, these JBODs were at their price peak along with most other storage, so it made sense to try to save a bit of money with DIY. But after playing with “real” enterprise storage hardware, I probably won’t be going back, especially if I can keep finding good deals. I’ll be posting a new thread soon with my new petabyte+ SAS setup and everything I’ve learned from that. Here are some lessons learned to cap off this thread:

- 3D printed mounts are not great long term. This was my biggest problem. The plastic spring mounts that I designed would lose their tension after warming up - they’d warm up just enough to deform to their new position without tension, which left the drives loose in their slots. If I did it again, I’d figure out some kind of metal mount to the wire rack for the drives.

- Powering over a hundred drives is not easy. Even with three 1200 watt power supplies, I’d still have random disk disconnections that I could never figure out. I assumed it was because of power fluctuations as hundreds of drives all seek randomly. It also could have been my wiring - perhaps I shouldn’t have chained together so many SATA power splitters. Either way, the electrical engineering involved to make these drives perfectly happy was a bit above my head.

- Wiring is quite complex, especially with SATA port multipliers, which also need to be powered. Lots of manual, tedious work here in routing cables and power.

In the end, I realized that I didn’t want to spend any more time pretending that I’m an enterprise JBOD designer, especially when prices started coming down. It was a fantastic learning experience and now I definitely appreciate all the enterprise features that SAS brings, which I will detail in another post soon!

Thanks for reading this project and all the positive comments. I’m still available here to help or for advice if anyone wants to carry the baton forward!

What JBOD enclosure did you get?

Thanks for sharing. I’m looking forward to your bought-jbod solution!

I’ll create a new thread with more detail but I’m using old Dell SC200’s. Got 9 of them full of 4tb drives for a little over $3000 shipped - less than $7/TB all in! I replaced about half of the drives with 18tb/16tb SATA drives and they work great. Plus they daisy-chain to each other so cabling is a piece of cake!
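For anyone checking the math on that figure, here is a rough sketch. It assumes each SC200 holds twelve 3.5" drives (the bay count is not stated in the post) and rounds the price down to $3000, so treat the result as approximate. The function name is just for illustration.

```python
def cost_per_tb(total_cost_usd, enclosures, bays_per_enclosure, tb_per_drive):
    """Return the cost in USD per terabyte of raw capacity."""
    total_tb = enclosures * bays_per_enclosure * tb_per_drive
    return total_cost_usd / total_tb

# Assumed: 12 bays per SC200 (not given in the post), price rounded to $3000.
price = cost_per_tb(total_cost_usd=3000, enclosures=9,
                    bays_per_enclosure=12, tb_per_drive=4)
print(f"{price:.2f} USD/TB")
```

Under those assumptions the raw capacity is 432 TB, which lands just under $7/TB, in line with the number quoted above.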

That is one heck of a deal. I wish I had access to USA markets

This thread is a treasure for farming gear information, thanks for keeping it up

Are you going to mod the servers for lower noise emissions? That’s a big issue I’m expecting with buying and running an actual chia server.

Take a look at that thread:

That farmer/harvester was a very strong/clean setup with locally attached 500TB disk space. For whatever reason, Chia node couldn’t handle that load. Changing to Flex fixed the problem. And no, that was not dust storm related.

I would assume that with that amount of disk space you will be self-pooling, and as such may not get the same feedback that pools provide (e.g., everything looks cool locally, still no wins).

Still, it would be nice to see how much you can push it.

They are definitely loud! I was running them in my laundry room, but I’ve already run out of space in my 27U rack so I had to get a full 42U rack for the garage (wouldn’t fit in laundry room). I also installed 2 more 240v plugs in my garage under the breaker box to run everything. So noise won’t be as big of an issue for me since they are out of my living space now.

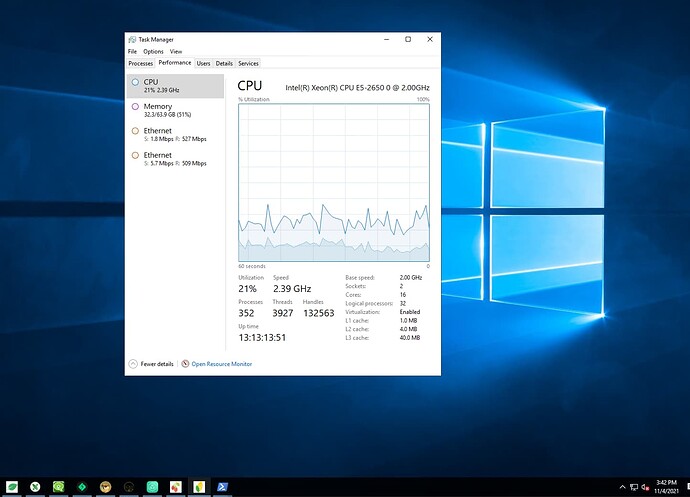

I’m not sure of the specifics of his setup or why he’d be having problems, but I’ve had no issues. I’m up to about 435tb in plots so far and adding another hundred or so a day. When I first set everything up and copied plots from drive to drive, I could get 8 file copies going at once, each with over 75MB/sec transfer. I’m using an old 8 lane 6gbps LSI SAS card in a Dell r720 with dual E5-2650’s, so 16 cores total. Only 64gb of RAM. I’m also running 8 forks alongside Chia. My lookup times are all sub-second. I’m actually looking at moving to an even older Dell r610 just because I think the r720 is overpowered for a farmer. The CPUs just twiddle their thumbs.

Liveshot:

The power consumption for your mb+ is mostly CPU and SAS/SATA controllers. Everything else is kind of in the same ballpark across different platforms. Maybe one option would be to switch to the E5-2650L v2? It is a 10-core processor, but with a lower clock, so it draws a tad less power.

Are you plotting with them too or just harvesting? Are you still trying to run Storj and/or other storage networks in parallel?

Have the Chia forks paid out so far?

Just full nodes/farming/harvesting, no plotting. Although I have 3 other plotters that are constantly transferring plots over the network - you’ll notice the network traffic in the screenshot above showing an incoming plot.

Yep, still running Storj as well! Screenshot taken while Storj running in background.

I haven’t traded or sold anything yet - everything just goes to cold wallets. The forks might not ever be worth anything but I figure I might as well farm them since I have plenty of spare CPU cycles…

That washer dryer hookup thou

It’s an impressive setup.

Can you guide me with something? Why are you running 16 VMs, one for each fork? I thought we could run all the forks from a single OS setup or machine.

Yes, you certainly can run all forks from a single machine. Originally I was doing this for security - this was back when forks first came out and so I was concerned about running them on the same machine as my main farmer. I don’t run separate VMs anymore. Here are my latest updates: https://chiaforum.com/t/the-journey-to-one-petabyte/

Sorry to hijack or dig up this older thread, but I stumbled upon that discussion.

@enderTown, since you have a lot of experience with those JMB585 SATA controllers on PCIe to SATA adaptors, as well as JMB575 1-to-5 SATA multiplier cards, maybe you can help.

I recently bought the latter (ADP6ST0-J02 acc. to the PCB). I hooked it up to a (sacrificial) test system with just one old 500GB spare SATA drive at first to check whether it is working at all. I noticed right away that it got significantly hot to the touch. The tiny heat spreader is glued on securely so I can’t easily check which chip is actually used. I assume JMB575. At least that’s what the box and description say.

Is it normal that those multipliers get that hot?

Did you hook them up via the 15 pin Sata connector or USB?

I then connected a secondary drive, but it wasn’t recognized in Windows. Any idea what I am doing wrong?

Thanks a lot in advance!

Those look to be the newer versions of the ones I have - mine don’t have SATA power connectors on them if you look at pics above and they also don’t have heat spreaders. I never noticed the chips getting very hot. I bet those newer versions are faster though!

As for the second drive not working, that usually means that your host SATA connection doesn’t support port multipliers. The first one is just passed through but the other 4 ports won’t work. Are you hooking it straight to the SATA ports on the motherboard? Most motherboards don’t support PMs - you’ll need to get an add-in PCIe card that supports them. See above for examples of those too!