Which “container” are you referring to? Do you mean the Docker one? I was under the impression it was still very much in beta at this stage.

Understanding whether you are using data protection (RAID 5) on the NAS is key. It is not required for Chia and does NOT scale well with file sizes in the hundreds of GB. Parity has to be computed on every write, so any writes to the NAS will dramatically slow down the array (which is why I asked about the background operations that NAS software likes to kick off).
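To make the parity cost concrete, here is a minimal sketch of XOR parity as RAID 5 uses it. The block contents and the helper name `xor_blocks` are made up for illustration, and real arrays work on much larger chunks, but it shows why changing one block drags extra reads and writes along with it.

```python
from functools import reduce

def xor_blocks(*blocks: bytes) -> bytes:
    """XOR equal-length byte blocks together, one byte column at a time."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three data blocks in one stripe (toy 4-byte blocks, purely illustrative).
d0, d1, d2 = b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"
parity = xor_blocks(d0, d1, d2)

# Overwriting a single block: the old data and old parity must be read first,
# then the new data AND the new parity are written back.
new_d1 = b"\xff\xee\xdd\xcc"
new_parity = xor_blocks(parity, d1, new_d1)

# The shortcut gives the same parity as recomputing over the whole stripe.
recomputed_parity = xor_blocks(d0, new_d1, d2)

# The parity block lets any single lost block be rebuilt from the others.
rebuilt_d0 = xor_blocks(new_parity, new_d1, d2)
```

So one small logical write turns into two reads plus two writes, which is the overhead being described.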

Says right there: RAID 0 stripe, with quite a few specifics. I also specified the filesystem in later posts, which is bog-standard ext4.

I like the idea of running harvesters but I am moving away from NASes entirely… the support folk on keybase said to avoid them, so I’m going to. Things were fine – and are fine RIGHT NOW – with only 3 nas devices active – as described above. When I added two more… it’s just too much variability in the system, I guess. I’m going to plain vanilla USB drives and eventually the 45-drive supermicro JBOD at the hosting provider.

I’m removing all the NASes from my environment, it’s taking some time to exfiltrate 90 TB of data times ~3 (luckily only 2 were completely full)… If you want to buy a slightly used TerraMaster F5-422 for a good deal, PM me.

One anecdotal data point: I’m running a full node with the official docker image on a Synology DS1019+ without any apparent issue. Only 30 plots so far, but so far so good.

Here we are experimenting with hundreds of terabytes of data and 83 posts of troubleshooting but you’ve got it solved for us with 3 TB!! Bravo sir, bravo!

You expressed skepticism about running chia in a container using the official docker image; I thought you might appreciate a data point. Guess I was wrong.

@codinghorror might consider running harvesters directly on his F5-422s, since they seem to be capable of running containers (according to the amazon product page, anyway).

I agree! I want to solve this problem for everyone not just me! That’s why I’m making so much noise about it! I want to be helpful, not just “get mine”. I do hope that’s clear in my posts because it is a deeply held principle, core to my beliefs.

Absolutely!

My $0.02 on your issue, with the disclaimer that I’ve only been aware of Chia since you tweeted a link to this forum last week or so:

My gut says your mistake was relying on mounted network storage. It’s conceptually simple, but there’s plenty of complexity hiding behind the abstraction of “this thing looks like a locally mounted drive (but isn’t)”. I don’t know how NFS/Samba/etc. are implemented, but it’s probable that their design assumes relatively small files that can be transferred whole (or in large chunks), not random access inside gigantic opaque plots.

Chia’s architecture (as I understand it*) suggests that you want to distribute the harvesting work to be local to the plot storage.

If I were you, I wouldn’t give up on my NASes just yet. I’d spend a day fiddling with running harvesters locally on them. From some cursory searches, it looks like you install docker on your NASes, and SSH in to run docker commands. You ought to be able to run the official docker image as containers, with configuration to run as harvester-only, pointing at a central farmer. At least, that’s my understanding.

Yeah, but that’s… complicated. One central farmer is simple. And I very VERY much want to keep it simple.

Meanwhile I will obsess over my network config and those harvester proof “eligible” log matches to make sure I’m not going over 30s…

(and if anyone wants to live this “run-the-thing-on-the-NAS” dream, feel free to make an offer on these terramaster F5-422s… I’ll sell them cheap!)

I found this video very informative generally, and they discussed your issue specifically at the timestamp in this link:

That was interesting. Thanks! I watched the relevant portion. The highlights for me:

- 8 seeks for a quality check (per plot that passes the filter, I’m assuming).

- 64 sequential seeks for the final proof.

- The guy in the grey shirt mentions native command queuing as helping with that type of workload (lots of seeks).

- The guy in the green shirt mentions trying to parallelize the lookups a bit more, but doesn’t really go into detail about whether or not the 8 seeks for quality are sequential or parallel.

So, assuming the workload is fairly sequential, you’re paying the aggregate (sum total) penalty for all slow seeks to the NASes. It’s really bad if those 8 seeks for quality are sequential in the sense of going plot by plot because getting a challenge that includes plots on every NAS would mean a single slow NAS could ruin it for the whole challenge. There’s a fair bit of assumption on my part, but think of something roughly like this:

- Here’s the challenge…

- Ok. 10 plots pass the filter…

- Alright, let’s check these plots for quality:

  - Check the 1st plot (hits NAS1)…

  - Check the 2nd plot (hits NAS2)…

  - Etc…

- Good times! Plot 6 passed the quality check.

- Perform the 64-seek proof for plot 6.

By adding NASes, you’d be increasing the odds of hitting a slow NAS (possibly more than once) during the per-plot quality checks (the indented bullet points). That adds up to a plausible scenario for me because it would explain why more NASes = more problems.
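Here is a rough sketch of that guess in Python. The 8 and 64 seek counts are the figures from the video; everything else (the per-seek latencies, which NAS each plot lives on, the function names) is made up for illustration, and it is not how Chia actually schedules its lookups.

```python
SEEKS_PER_QUALITY_CHECK = 8   # figure quoted from the video
SEEKS_PER_PROOF = 64          # figure quoted from the video

def sequential_quality_time(plot_locations, seek_latency_s):
    """Plots checked one after another: every slow seek adds to the total."""
    return sum(SEEKS_PER_QUALITY_CHECK * seek_latency_s[nas] for nas in plot_locations)

def parallel_quality_time(plot_locations, seek_latency_s):
    """Plots checked all at once: the total is bounded by the slowest one."""
    return max(SEEKS_PER_QUALITY_CHECK * seek_latency_s[nas] for nas in plot_locations)

def proof_time(nas, seek_latency_s):
    """64 sequential seeks against the NAS holding the winning plot."""
    return SEEKS_PER_PROOF * seek_latency_s[nas]

# Hypothetical per-seek latency in seconds; NAS3 is busy with something else.
seek_latency_s = {"NAS1": 0.02, "NAS2": 0.02, "NAS3": 0.5}

# Ten plots pass the filter, spread across the three NASes.
plots_passing_filter = ["NAS1", "NAS2", "NAS3"] * 3 + ["NAS1"]

seq = sequential_quality_time(plots_passing_filter, seek_latency_s)
par = parallel_quality_time(plots_passing_filter, seek_latency_s)
print(f"sequential quality checks: {seq:.2f} s")
print(f"parallel quality checks:   {par:.2f} s")
print(f"proof on the slow NAS:     {proof_time('NAS3', seek_latency_s):.2f} s")
```

With these invented numbers, the sequential path costs about 13 s against 4 s in parallel, and a 64-seek proof that lands on the slow NAS takes 32 s all by itself, which is already past the 30 s limit.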

Then, if there’s an expectation of leaning on NCQ (native command queuing) to help sort out those seeks (they didn’t actually say that - it was just an off-the-cuff remark IMO), you get into the complexities of NCQ and mdadm. Wikipedia says there can be issues with RAID5 (no mention of RAID0). This ServerFault question asks about RAID0 performance on SSDs and gets told to check for NCQ errors. If NCQ is supported for mdadm RAID0 and passed to each disk and a single disk gets piled up for 1s, then what? Do you pay a “worst seek” penalty on a per-disk basis for RAID0? How long does it take to figure out if that’s even a thing? It would take me 1-2h and I know enough to skip past the poor quality information that doesn’t add up.

TL;DR: It’s complicated and you get into what @codinghorror says:

If you wanted to diagnose it, and assuming it’s a NAS I/O type problem, you’d need to understand how mdadm works with the underlying disks in use, all of the mdadm options used by the NAS, the impact of NCQ in the context of RAID0 with mdadm, whether the NAS is layering any other tech into the stack (like LVM) plus how it all works, and the exact way Chia actually performs its checks, so you know when every hit to the disk/NAS occurs.

Then repeat the process for the network which will be equally complex.

If you come up with a plausible working theory, the vendors of those consumer grade devices will never be able to help you because (understandably) no one working support is going to have the expertise to verify a (complex, narrow) problem where it might have taken you 2 weeks of learning and testing just to come up with a working theory.

And yes, you could (maybe) make it work, but before you blink you’ll have 5 Docker containers (one per NAS) and 25 independent disk volumes which will require a significantly more complex plotting strategy if you want to maximize the ingestion rates of each NAS. Your (plot count on) disks will become unbalanced and require babysitting, you’ll have 5x the monitoring, 5x the updates, 5x the config (SSL, etc.), etc…

That’s the kind of thing that I would find fun to do in a testnet scenario, but the lost optimization / time during mainnet would drive me crazy, especially when I check every day and see my plot count going up while my win chance goes down and my wallet is unchanged.

That guy is me. FYI I had zero background on the issue haha. Also great work on the forum guys

Yeah, this 30 second harvester limit thing is gonna start biting a LOT of people in the ass, very quickly as the number of plots grows – especially since it’s completely silent! The 30s timeout needs to be escalated to ERROR level like… immediately. The fact that it’s terminal, a deadly result that causes you to lose coins, and is an INFO level event… that’s… really bad!

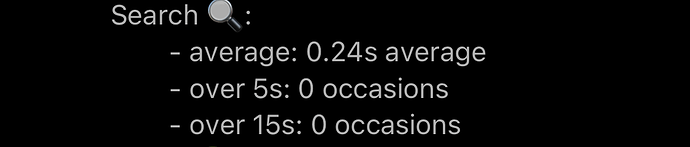

FYI, the latest chiadog release from today adds a daily report on how many times the plot search took more than 5 and more than 15 seconds. It also sends immediate alerts for anything > 20 seconds.
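For anyone who wants to see what that kind of report boils down to, here is a minimal sketch. To be clear, this is not chiadog’s code: the function name and the result keys are made up, and only the 5 / 15 / 20 second thresholds come from the description above.

```python
def summarize_search_times(times_s, warn_s=5.0, slow_s=15.0, alert_s=20.0):
    """Count slow plot searches for a daily report and collect immediate alerts."""
    return {
        "over_5s": sum(t > warn_s for t in times_s),
        "over_15s": sum(t > slow_s for t in times_s),
        "alerts": [t for t in times_s if t > alert_s],
    }

# Example search times in seconds (illustrative values).
report = summarize_search_times([0.4, 6.1, 16.0, 36.41071])
print(report)
```

Note the counts overlap on purpose: a 16 s search shows up in both the 5 s and the 15 s totals.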

Great! Sorely needed! I spent 5 days in no-win purgatory due to this design flaw in the system! I hope it is fixed for everyone

Look what I found in my logs today

2021-04-27T18:24:30.907 harvester chia.harvester.harvester: INFO 8 plots were eligible for farming 65fd441b6b... Found 1 proofs. Time: 36.41071 s. Total 3213 plots
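Since these lines are only logged at INFO, it helps to scan for them yourself. Below is a rough sketch that parses a line shaped like the one above and flags lookups over 30 s. The regex is built from that single sample line, so treat the exact format as an assumption; the names `parse_harvester_line` and `is_too_slow` are made up.

```python
import re

# Pattern inferred from one sample log line; other Chia versions may differ.
LINE_RE = re.compile(
    r"(?P<timestamp>\S+) harvester \S+: INFO\s+"
    r"(?P<eligible>\d+) plots were eligible for farming (?P<challenge>\S+) "
    r"Found (?P<proofs>\d+) proofs\. Time: (?P<seconds>\d+\.\d+) s\. "
    r"Total (?P<total>\d+) plots"
)

def parse_harvester_line(line):
    """Return the fields of an 'eligible for farming' line, or None if no match."""
    m = LINE_RE.search(line)
    if m is None:
        return None
    return {
        "timestamp": m["timestamp"],
        "eligible": int(m["eligible"]),
        "proofs": int(m["proofs"]),
        "seconds": float(m["seconds"]),
        "total": int(m["total"]),
    }

def is_too_slow(entry, limit_s=30.0):
    """True when the lookup took longer than the limit (30 s by default)."""
    return entry["seconds"] > limit_s

sample = (
    "2021-04-27T18:24:30.907 harvester chia.harvester.harvester: INFO "
    "8 plots were eligible for farming 65fd441b6b... Found 1 proofs. "
    "Time: 36.41071 s. Total 3213 plots"
)
entry = parse_harvester_line(sample)
if entry and is_too_slow(entry):
    print(f"{entry['timestamp']}: {entry['seconds']} s lookup, {entry['proofs']} proof(s) found")
```

Run something like this over the whole log and any line that prints is a lookup that blew the limit, whether or not it had a proof in it.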

I’m desperately trying to move things off NAS into plain old USB… but in doing so… I’m adding load to the disks as I copy, so… mixed blessings I guess… sigh.

I had a really funny thing to say about this video. I composed my reply and anxiously hit the reply button, only to be greeted by this pop-up dialog from the forum software…

“Body seems unclear, is it a complete sentence?”

Hahahhahahahhahahah

That’s usually when you try to post in all caps …

That’s it! But it sorta needed to be in all caps, lol.

OMG - so you were 6 seconds away from glory? Soul crushing!