100% agreed. Chia team needs to hire a UX professional like NOW.

OMG!

As one last data point from me, I copied the last of my plots around today to even out my disks and I saw this popping up again. It wasn’t as extreme, but I have far fewer plots. However, on my main node where I normally see 1s or less, I had one check that took 31.5s. That was while copying a plot from an SSD to a (slow-ish) USB HDD.

The other more interesting one for me was copying plots from one SATA disk to another SATA disk in the same system.

```
1 plots were eligible for farming 4c41a81d0c... Found 0 proofs. Time: 14.82553 s. Total 276 plots
```

That’s one check taking 15s because I had the disk saturated copying a plot onto it. @zogstrip had similar results (small plot count, high check times), but to a NAS.

@andrew Did you ever get to try out ionice? That was a really good idea.

My farm is full (387 plots) and running a final plot check right now, so I won't have much to add after this, but I agree that a 30s+ response to a challenge should be an ERROR in the logs. There's an assumption that you should never need more than 30s, so if that's a correct assumption, more than 30s is an error condition.

Actually, no. I haven't been able to reproduce my rare occurrences of >30 second checks, despite still farming on disks that finished plots are frequently copied to, so I'm not sure what was going on originally when I reported slow checks. The longest any of my checks have taken in the last 24 hours is about 2 seconds.

TBH, I couldn't get much consistency either. I copied 48 plots onto 12 disks (4 per disk) on that machine, and that's the only check I got that was above 10s. They were 2TB disks, so they only had 14-17 plots each, which should give each disk about a 3% chance of passing the filter on any given challenge, and there would be 192 challenges (4608 per day / 24h) in the hour it takes to copy those files. It seems unlikely I did that 12 times and only once had a challenge hit a completely saturated disk. I had a lot in the 2-5s range, but that's OK IMO. I'm not positive I got that math right.
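To sanity-check that arithmetic, here is a small sketch. It assumes each plot passes the filter independently with probability 1/512 (the mainnet plot filter at the time) and takes the post's figure of 192 challenges per hour as given; `filter_odds` is a hypothetical helper name.

```bash
#!/bin/bash
# Back-of-the-envelope check of the plot-filter math.
# Assumption: each plot passes the filter with probability 1/512.
# challenges_per_hour is the poster's estimate (4608 per day / 24 h).

filter_odds() {
  local plots=$1 challenges_per_hour=$2
  awk -v n="$plots" -v c="$challenges_per_hour" 'BEGIN {
    # Chance that at least one of n plots on the disk passes the filter.
    p = 1 - (511 / 512) ^ n
    printf "plots=%d p_disk=%.3f eligible_per_hour=%.1f\n", n, p, p * c
  }'
}
```

Running `filter_odds 14 192` and `filter_odds 17 192` brackets the 14-17 plot range: roughly 2.7% to 3.3% per challenge, or about 5 to 6 eligible challenges per disk per hour. If each copy took about an hour, as in the post, that is on the order of 60-75 lookups landing on a disk while it was being written across the 12 disks, which supports the "seems unlikely" intuition.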

So, for me, there must be some other contributing factor for that one slow check. I searched all the logs on my system hoping for a hint, but I couldn't find anything. I think it's probably reasonable to tolerate the odd slow check on disks that aren't idle, but if it happens more than once or twice every day or two, I think the GUI should flag it.
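A rough way to apply that "once or twice every day or two" rule by hand is to count slow checks per day in the `times.txt` file that the times.sh script later in this thread writes (one "timestamp seconds" pair per line). This is a sketch, not anything Chia ships: `slow_checks_per_day` is a hypothetical name, and the 30 s threshold and "more than 2 per day" limit are illustrative defaults.

```bash
#!/bin/bash
# Count lookups slower than a threshold per day from "<ISO timestamp> <seconds>"
# lines on stdin, and mark days that exceed an allowed count.
# Defaults (30 s, more than 2 per day) are illustrative, not Chia settings.

slow_checks_per_day() {
  local threshold=${1:-30} max_per_day=${2:-2}
  awk -v t="$threshold" -v m="$max_per_day" '
    # Only numeric second fields; key each slow check on YYYY-MM-DD.
    $2 ~ /^[0-9.]+$/ && $2 + 0 > t + 0 { slow[substr($1, 1, 10)]++ }
    END {
      for (day in slow) {
        flag = (slow[day] > m) ? "FLAG" : "ok"
        print day, slow[day], flag
      }
    }' | sort
}
```

Usage: `slow_checks_per_day 30 2 < times.txt` prints one line per day that had any check over 30 s, with `FLAG` on days that had more than two.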

It'll be tough, though, because I agree with the sentiment that a decent number of people are going to be affected while they're plotting and just don't realize it right now. If you look at all the people on Reddit posting screenshots of winning with <30 plots, it's not hard to imagine the people who found proofs but were too slow turning into bad publicity, because all of them will post about it.

I feel for the developers on this one. As soon as (or if) they start surfacing this as an error, they're going to be inundated with all kinds of complaints like "the software totally fails on my disks that are tied to a pole, connected with spliced-together cables, and cooled by the neighbor's air conditioner exhaust. WTF guys!!"

Lol.

If it helps anyone, I use a simple shell/gnuplot script, refreshed every 5 minutes, to monitor my challenge times (is that the correct term?) and correlate them with any changes in my hardware or network. Example:

Above:

- At ~08:00 - I added a new HDD and started copying plots to it.

- At 11:00 - I ionice’d the rsync process to see if it made any difference - which apparently it did not!

I'm trying to get gnuplot to cooperate in plotting a cumulative distribution of the times for a time window (most of the points are on top of each other in my case, which makes it hard to see how many there are), or multiple time windows on the same plot, e.g. hourly, but I'm having trouble with the syntax so far.
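One way around the gnuplot syntax is to compute the cumulative distribution in the shell and plot it as a plain x/y series. A minimal sketch, assuming the two-column `times.txt` format (timestamp, seconds) written by the times.sh script below; `ecdf` is a hypothetical helper name.

```bash
#!/bin/bash
# Turn the seconds column of times.txt (read on stdin) into an empirical CDF:
# each output line is "<time> <fraction of checks at or below that time>".
# Points stacked on top of each other in a scatter plot become steep steps.

ecdf() {
  awk '$2 ~ /^[0-9.]+$/ { print $2 }' \
    | sort -n \
    | awk '
        { v[NR] = $1 }
        END { for (i = 1; i <= NR; i++) printf "%s %.4f\n", v[i], i / NR }'
}
```

For hourly windows, filter on the timestamp prefix first, e.g. `grep '^2021-05-20T08' times.txt | ecdf > cdf_08.txt`, repeat for other hours, and draw each file `with steps` in a single `plot` command. gnuplot's `smooth cnormal` option is an alternative that builds a normalized cumulative curve inside gnuplot itself.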

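times.sh:

```bash
#!/bin/bash
# Pull "<timestamp> <lookup time>" pairs out of the harvester log lines.
# sed -n ... p prints only lines where the substitution matched, so
# harvester lines without a "Time:" field don't leak into times.txt.
cat /home/chia/.chia/mainnet/log/debug.log* \
  | grep "harvester chia.harvester.harvester" \
  | sed -n 's/^\([^ ]\+\).*Time: \([0-9.]\+\) s.*/\1 \2/p' \
  > times.txt

gnuplot times.gp
display times.png
```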

times.gp:

```gnuplot
set terminal png size 600,200
set output 'times.png'
set style data points
set key box top left
set xdata time
set xlabel "time (UTC)"
set ylabel "challenge time (s)"
set timefmt '%Y-%m-%dT%H:%M:%S'
set style line 1 lc rgb '#2080c0' pt 1 ps 0.5
plot "times.txt" u 1:2 t 'challenge' w p ls 1
```

This change can be made in the YAML config file in `.chia\mainnet\config`.

details here: Private Site

I have 3 PCs with one wallet (plotting and farming on all of them). How can I turn all my PCs into full nodes?

Please explain.

Also, must I copy all plots to every full node?

I just read through this entire thread because I am having the same problem on my farm, which spans 5 Synology devices and 2,400 plots. Chiadog reports seek times of 20-40 seconds. I have all plots dumped in one folder.

Has anyone found a cure? I do not want to abandon my Synology setup. They are very quiet, efficient, and organized.

I am sad to say, no. I will tell you that "all plots in one folder" was VERY bad mojo for me, so the first thing I would recommend is that you split it up into multiple folders. When I switched from **one** folder per 90TB NAS to **five** folders per 90TB NAS, things immediately improved a lot. But ultimately the NASes are little chaos machines on the network, and I'm getting rid of all mine and going for straight-up simple "tons of USB-attached disks".
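For anyone who wants to try the multiple-folders change on an existing share, here is a minimal sketch that spreads the `.plot` files in one directory round-robin across N subfolders. The `split_plots` name and the `sub0`..`subN-1` layout are hypothetical; afterwards the harvester's plot directory list has to be updated (for example `chia plots add -d` for each new folder and `chia plots remove -d` for the old one). As the post says, this helped but did not cure the problem.

```bash
#!/bin/bash
# Spread *.plot files in one directory round-robin across n subdirectories
# named sub0 .. sub(n-1). Hypothetical helper; the naming is an assumption.

split_plots() {
  local dir=$1 n=${2:-5} i=0 k f
  for ((k = 0; k < n; k++)); do
    mkdir -p "$dir/sub$k"
  done
  for f in "$dir"/*.plot; do
    [ -e "$f" ] || continue            # no matches: the glob stays literal
    mv -- "$f" "$dir/sub$((i % n))/"   # same filesystem, so this is a rename
    i=$((i + 1))
  done
}
```

Usage: `split_plots /path/to/plots 5`, ideally with the harvester stopped while files move.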

Shit dude that does not sound very scalable. Even with 18TB externals you are going to look like a scene out of the Matrix growing plot babies in a lab.

@codinghorror I can't find where I saw this originally, but one of the Chia experts/staffers on Keybase recommended a "when all else fails" solution. This was sometime late last year. He said to delete your chia folder located at `C:\Users\<insert username>\.chia` and force a fresh blockchain download. The reasoning behind it was that there may be something corrupted that is not visible or easy to find, and this would help the person he was chatting with over there.

I'm only farming 657 plots, and up until last week I was consistently winning two XCH every other day since the mainnet launch. Since last week, nothing. I'm in the process of downloading the blockchain again myself to see if this resolves the issue. I've checked everything just like you, and all signs point to a successful farm operation. I don't know what else to do other than this. I'll report back if this helps me.

I'll add one point so people who try this step don't panic and put a hex on me: when you delete your chia folder, the wallet will show "0" for the XCH you earned. Again, don't panic. Once you sync the blockchain, your XCH will appear as the sync reaches the heights where you earned the coins. I know this because I had a slight coronary when I did it this morning.

> When you delete your chia folder, the wallet will show "0" for the XCH you earned. Again, don't panic. Once you sync the blockchain, your XCH will appear as the sync reaches the heights where you earned the coins. I know this because I had a slight coronary when I did it this morning.

Isn’t being verifiable the whole point of a blockchain? I sure hope that was only for testnet or before it was considered stable.

@ryan It appeared the fix was specific to that user's machine, not a sign that the blockchain was at fault. I have no idea if this would help @codinghorror; I'm just throwing it out there since we're exhausting all possibilities.

To summarize (TL;DR), here's what I think ought to happen:

- Make it log ERROR when the harvester takes more than 30s to return. Right now it is INFO and that's… deeply uncool.

- Surface this harvester time in the GUI, perhaps only if it’s taking longer than 5s so people can be aware if they’re slipping down that slippery slope?

- Tell us which specific plots the harvester is picking so we can isolate problem devices.
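Until something like that exists, the first two thresholds can be applied to existing logs after the fact. A minimal sketch, assuming INFO-level harvester lines in the "... eligible for farming ... Time: N s ..." format quoted earlier in the thread; `classify_lookups` is a hypothetical name and its output format is made up for illustration. It cannot name the specific plots, since those lines don't include them.

```bash
#!/bin/bash
# Apply the proposed thresholds to debug.log lines on stdin:
# over 30 s -> ERROR, over 5 s -> WARNING, otherwise print nothing.
# Output: "<level> <seconds> <timestamp>" (an illustrative format).

classify_lookups() {
  awk '/eligible for farming/ && match($0, /Time: [0-9.]+ s/) {
    s = substr($0, RSTART + 6, RLENGTH - 8)   # keep only the number
    t = s + 0
    if (t > 30)     print "ERROR", s, $1
    else if (t > 5) print "WARNING", s, $1
  }'
}
```

Usage: `classify_lookups < /home/chia/.chia/mainnet/log/debug.log`.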

I’m also curious if this 30 second harvester limit is truly written in stone; I think relaxing it to something like 60 seconds would be quite helpful long term, but I’m not in charge of the project!

Have you checked your MTU settings to make sure they match on the client and the NAS?

Also, there is some new caching “feature” in SMB 3; this was causing us issues with a lot of files in a single directory taking forever to open. You could try falling back to SMB 2/1 to see if that makes any difference?

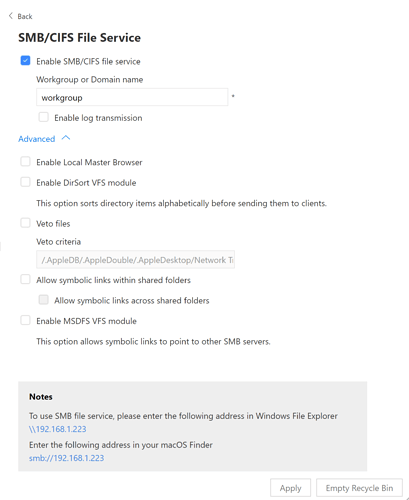

I don’t know; these are the only options provided for SMB/CIFS

I can 100% tell you that "all the files in one giant 90TB folder" was a disaster… the minute I switched from that to 5 folders per 90TB NAS, there was a dramatic improvement. However, it did not magically fix the problem altogether, even when I went to 15 folders per NAS.

(This is all on RAID 0, so it should be fast in theory…)

One bit of anecdotal evidence regarding proof times. I have several remote harvesters each with about 10-20 TB and about 50TB directly connected to my full node, which is a very powerful iMac Pro. I was seeing proof times on the harvesters as well as the full node well below 1 second with this system.

BUT - I was also plotting on the Full Node while it was serving as the remote farmer for my harvesters. CPU load was hovering around 50% so I figured I was fine in terms of being able to respond to proof challenges.

I ran out of space to do additional plotting on the full node so it’s just sitting there as a farmer now.

I went over 2 weeks without a win while the Full Node was also plotting. After stopping the simultaneous plotting on the full node a few days ago I’ve won 3 times.

I’m sure this is entirely coincidental, but it seems to me there’s something going on with my ability to win while the Farmer CPU is under load. No idea why this would be, but there it is.

Hmm. Unless you're seeing an increase in harvester times, I don't know that I'd go by that. Set your log level to INFO and grep for

`^.*?eligible.*?$`

(that pattern needs `grep -P` or `grep -E`; plain `grep eligible` matches the same lines). That'll give you just the eligibility results from the harvester.
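To compare machines, for example the three-farmer setup described later in this thread, a per-log summary of those eligibility lines may be easier to read than raw grep output. A minimal sketch, assuming the "... Time: N s ..." line format; `summarize_lookups` is a hypothetical name, and the 5 s cutoff simply mirrors the GUI threshold suggested in this thread.

```bash
#!/bin/bash
# For each log file given, summarize harvester lookup times from lines
# containing "eligible for farming": count, max, mean, and how many exceeded 5 s.

summarize_lookups() {
  local log
  for log in "$@"; do
    awk -v name="$log" '
      /eligible for farming/ && match($0, /Time: [0-9.]+ s/) {
        t = substr($0, RSTART + 6, RLENGTH - 8) + 0
        n++; sum += t
        if (t > max) max = t
        if (t > 5) slow++
      }
      END {
        if (n == 0) { print name, "no lookups"; exit }
        printf "%s n=%d max=%.2f mean=%.2f over5s=%d\n", name, n, max, sum / n, slow
      }' "$log"
  done
}
```

Usage: copy `debug.log` from each machine under a distinct name and run `summarize_lookups nuc.log ryzen.log nas.log` (hypothetical file names).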

I did eventually bite the bullet and split into 3 GUI farmers:

- on my main Phantom Canyon NUC, to all USB drives connected to it and three network-share 18TB dump drives
- on my AMD Ryzen plotter, to all USB drives connected to it, plus its own 7 drives connected via mobo SATA (actually 8, but one is used for the OS)
- on an Intel 10th-gen NUC, just to the two TerraMaster NASes left; I've managed to slowly, painfully extract 90TB from the other two full ones on the network as of today.

I have port-forwarded 8444 to the primary machine (#1 above) and turned UPnP off in the config.yaml on #2 and #3. I really wanted to avoid doing this, but I felt like I had no choice; my harvester times, even with the NASes removed, were all over the place…

This lets me compare harvester proof times on all 3 machines, as log level is set to INFO on all 3.

We’re going to have to start adding Chia network architect to our resumes!