Thanks for your answer.

I have been plotting with -r 30, -u 256 on a 5950X (stock, no OC), 32GB 4GHz RAM, -t 970 EVO PLUS 1TB, -2 MP600 2TB, at about 38 minutes a plot. I will try -r 16 and report back.

Going to give these a try. I just added a few mount flags related to this and it seems to help.

One weird thing I have: the 5900 with wd750 + samsung 980pro likes XFS more than EXT4, while the 5950x with 3x wd750 likes EXT4.

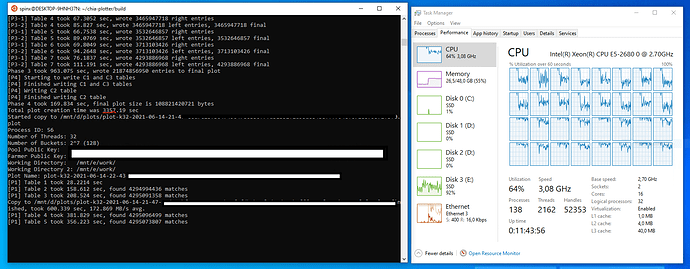

I’d like to compare notes too. I have dual Xeon E5-2650V2 with 320GB RAM, so I am using a ramdrive for temp2 and SAS (single drive) as temp1. It doesn’t quite max out the processors, so I am staggering two processes and producing around two plots every 14000 seconds, which is about 12-14 plots per day.
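As a rough sanity check on those numbers, here is a minimal Python sketch that turns "N plots every T seconds" into plots per day. The function name `plots_per_day` is just an illustrative helper, not part of any plotter.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def plots_per_day(plots_per_cycle, cycle_seconds):
    """Daily throughput for a setup that finishes `plots_per_cycle`
    plots every `cycle_seconds` seconds."""
    return plots_per_cycle * SECONDS_PER_DAY / cycle_seconds


# Two staggered processes finishing two plots every 14000 seconds.
print(f"{plots_per_day(2, 14000):.1f} plots/day")
```

With the figures quoted above this lands at the low end of the 12-14 range, so the estimate looks consistent.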

When I am back at work where the servers are, I will reconfigure some of my SAS drives as two-disk RAID 0 stripes. I think this will help. I am not planning to use any SSDs/NVMes, but it’s tempting to install a PCIe NVMe.

For me, plotting 7-10 in parallel to separate SAS drives (no NVMes or SSDs are being harmed in the making of these plots), which takes 16 hours, and plotting 2 madmax processes in parallel both result in the same number of plots per day.

The advantage for me is that I can interrupt the process every 2-3 hours rather than every 16 if I need to.

There isn’t enough data posted to verify this isn’t a fake.

Will be interested to hear your results. If I were to guess, I would say the difference in “preference” of FS might be related to discard behavior? Are you mounting with or without discard? I’m barely touching my SSDs so I haven’t tested, but what I would suspect would work best is: Mount without discard, and do manual fstrim in between plot runs.

I mount without discard and have a cron job for trim every hour.
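For anyone who wants to trim between plot runs instead of on a timer, here is a minimal Python sketch of the idea. It assumes Linux with the standard `fstrim` utility and root privileges; the mount points in the comment are hypothetical placeholders, and `trim_all` is an illustrative helper, not something the plotter provides.

```python
import subprocess


def fstrim_command(mount_point):
    """Build the fstrim call for one mounted filesystem (-v prints bytes trimmed)."""
    return ["fstrim", "-v", mount_point]


def trim_all(mount_points, run=subprocess.run):
    """Trim each temp filesystem in turn; raises if any fstrim call fails."""
    for mount_point in mount_points:
        run(fstrim_command(mount_point), check=True)


# Hypothetical usage between two plot runs (needs root):
# trim_all(["/mnt/tmp1", "/mnt/tmp2"])
```

The `run` parameter is only there so the helper can be tried with a stand-in function before pointing it at real drives.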

With your configuration, I’d suggest trying a configuration I mentioned above:

- Run both tmp1 and tmp2 in tmpfs, with a 256GB tmpfs

- Just run 1 at a time since you’re buffering the entire thing in ram

I’m able to get sub-1500s plots with dual E5-2690v2 and 256GB of RAM. I would imagine you can get around 2000s plot times or thereabouts.

With 320GB of RAM, you don’t need NVMe, and you’re better off running one at a time and just using a ramdisk for everything.

Thanks, I did try initially with everything in a ramdrive (temp1 and temp2) but was still only getting about 10000-second plots. I am on Windows and using DDR3 RAM, which I assumed just wasn’t all that fast.

Ah yes, part of my theory is that the Linux tmpfs implementation of a ramdisk is much more efficient than the third-party Windows ramdisks. I’m using DDR3 RAM as well and getting the 1500s plot times I mentioned, so it’s not a RAM speed issue; it’s likely the Windows implementation. Which Windows ramdisk are you using?

Do they farm for you, personally as privately farmed plots? They should do. If so farm them. Using them for HPOOL I know nothing about. You might be replotting once official pools come out anyway, along with the rest of us.

I’ve tried ImDisk and OSFMount. Not much in it; maybe ImDisk was faster by 5%, so I switched back to it. I might switch to Linux. Until pool plots come out, my personal plots are basically an experiment anyway. I’ve spent some time using Raspberry Pis, so I can probably find my way around Ubuntu.

Have you tried to run MadMax using the ubuntu app for windows? That way you can use the linux version of the RAMDrive mapping. Here is a quick set of instructions on how to set it up. That is what I am using. Works well. (I don’t have RAMDrive yet. I will at the end of the week)

Some quick stats from one of my budget-builds:

2x Xeon 2680v1 (total 16c32t)

48GB DDR3

1x Corsair MP600 NVME

Creating a plot every 3300s ~26 a day.

Total cost of hardware was ~$350

5900x+64GB 3200MHz+( wd750 2TB+980pro 2TB ) mdadm raid0 XFS: ~1450s (22 threads 512 buckets)

5950x+64GB 3733MHz+( 3 x wd750 2TB ) mdadm raid0 EXT4: ~1300s (30 threads 512 buckets)

Feels like the 5950x could be doing a bit better when you see how well the 5900x does with 1 fewer drive and 4 fewer cores. The CPU isn’t being fully utilized; most of the time I see at least 30% idle.

Yes - I agree it should probably be able to do better. If trying to optimize, I’d probably test a single WD750 for tmp1 and 2x WD750 raid0 for tmp2. Are you using the discard mount option? I’ve theorized that disabling the discard mount option and doing a manual fstrim between plot runs would be the best approach.

Already manually discarding every hour with cron job.

I have done a few tests. My setup: AMD 5950X (OC by CTR 2.1), 32GB 4GHz RAM, -t Samsung 970 EVO PLUS 1TB, -2 Corsair MP600 2TB.

-r 16, -u 256: 1556 sec ( ~25.93 minutes),

-r 30, -u 256: 1523 sec ( ~25.38 minutes),

-r 32, -u 256: 1517 sec ( ~25.28 minutes).
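To put the three results side by side, here is a small Python sketch that converts each time to minutes and shows how much faster it is than the -r 16 run. The timings are the ones posted above; `percent_faster` is just an illustrative helper.

```python
def percent_faster(baseline_seconds, seconds):
    """Percentage of plot time saved relative to the baseline run."""
    return (baseline_seconds - seconds) / baseline_seconds * 100


# -r thread count -> seconds per plot, all with -u 256 (figures from the post).
runs = {16: 1556, 30: 1523, 32: 1517}
baseline = runs[16]

for threads, seconds in runs.items():
    print(f"-r {threads}: {seconds}s = {seconds / 60:.2f} min, "
          f"{percent_faster(baseline, seconds):.1f}% faster than -r 16")
```

Going from 16 to 32 threads saves only about 2.5% per plot on this setup, which suggests the thread count is not the bottleneck here.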

- Overclocking your processor can really make a difference (no OC ~38 minutes, OC using CTR 2.1 ~25 minutes). EDIT: I have also trimmed my drives and deleted about 300GB, which affected performance. The actual difference ended up being about 1-2 minutes. I would still recommend CTR because it can lower your power consumption and heat output and also boost performance a little.

There’s no way you gained 52% performance from overclocking a 5950x unless you literally submerged it in nitrogen. Something was wrong on your first run.
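For reference, the 52% figure follows from the two plot times quoted earlier (about 38 minutes stock, about 25 minutes overclocked). A quick Python sketch of the arithmetic, using illustrative helper names:

```python
def throughput_gain_percent(old_minutes, new_minutes):
    """How many more plots per unit time the faster run produces."""
    return (old_minutes / new_minutes - 1) * 100


def time_saved_percent(old_minutes, new_minutes):
    """How much shorter each plot is with the faster run."""
    return (1 - new_minutes / old_minutes) * 100


print(f"throughput gain: {throughput_gain_percent(38, 25):.0f}%")
print(f"time saved per plot: {time_saved_percent(38, 25):.1f}%")
```

The same pair of numbers reads as a 52% throughput gain or roughly a 34% shorter plot time, depending on which way the ratio is taken.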

Maybe it was aimed more at parallel plotting across many disks, but I tried two madmax processes in parallel with and without PrimoCache, and it made no difference.