Ahh okay, so maybe that’s why the PCIe x1 card is acting up, and my friend said that the PCIe x4 card is working for him.

They’re old motherboards (H61, H110), and even on a newer Z270 it still causes the same problems…

How did you do this? Did you just hit ‘Add Plot’ a bunch of times and queue up batches of 2 at a time with some offsets until the next few days/weeks of work time looked filled up?

OH, gotcha, sorry I missed that detail. So officially my answer would be “ego/OCD and an illogical desire to have the build ‘super maxed out’; also, I didn’t think about getting a SATA riser card.”

What I currently do is add the plots 8 at a time, with a 6-hour offset for the plots on the WD Black drive and a 7-hour offset for the FireCuda drive. I set up the queues manually. The total time I set them to run has to be worked out around how long it takes to move the finished plots off the drives. At this point my main concern was making sure I was sustaining at least 6 plots at a time.

(unpacking this in my mind) OK, so on the ‘Add Plot’ screen, this means you are selecting “Parallel Plotting” with 8 plots selected in the drop-down, and then in the delayed start box you are putting how many minutes? 60?

And then you are setting the temp drive to one of your plotting drives and hitting ‘Add Plot’, then doing that again for the other drive, I suppose.

So that fires off the plotting, but then how are you queuing up work behind that? Or do you wait for all of that to finish before bringing those dialogs up again and configuring more?

You really need a plot manager. You will quickly figure out that trying to do this via the GUI will not work the way you think it will. Check out Swar’s Plot Manager. It is easy to set up and use. You will be grateful you did.

I’m 4 hrs into 4 parallel plots and it’s killing me sitting here watching the computer basically do nothing, very slowly.

You are 100% right, and I just saw that in your other thread. I’ll take a peek, thanks bro!

Just so you know: if you plug an x1 card into an x1 slot, on just about every non-server Intel board, it pulls the adjacent x4, x8, or x16 slot down to x1. And if you plug an x1 card into an x16 slot, it pulls down all slots (x4, x8, and x16) on that bank, and sometimes all slots if your motherboard is wired up that way. If you plug an x1 card into any slot connected directly to the CPU, it will also pull the DMI down to x1, which shuts down on-board Ethernet, WiFi, USB, SATA, NVMe M.2, and so forth. Why? Because FUCK YOU, that’s why. Or, in Intel’s own manifesto, “You want more PCIe lanes, buy a server CPU,” which is a bit like saying “nobody would ever need more than 640KB of RAM.” Another part of the problem is how the motherboard’s PCIe switch works: some BIOSes expose control of it and let you switch slots between different capabilities, or bifurcate them, but many do not. Check your BIOS to see whether you can explicitly set an x4 or x16 slot to run as an x1 slot; if you can, you should do that.
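To put rough numbers on why an x1 card can choke, here is a minimal Python sketch of theoretical one-direction PCIe link bandwidth by generation and lane count. The transfer rates and line encodings are the published per-generation values; real throughput is lower once protocol overhead is counted, and the helper name `link_bandwidth_mb_s` is just for illustration.

```python
# Raw transfer rate (GT/s) and line-encoding efficiency for each PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_mb_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in MB/s (10**6 bytes), ignoring protocol overhead."""
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # One bit per lane per transfer: x1000 gives Mb/s, /8 gives MB/s.
    return gt_per_s * 1000 * efficiency * lanes / 8


if __name__ == "__main__":
    for gen, lanes in [(2, 1), (3, 1), (2, 4), (3, 4)]:
        print(f"PCIe {gen}.0 x{lanes}: {link_bandwidth_mb_s(gen, lanes):.0f} MB/s")
```

Assuming the x1 slot runs at PCIe 2.0, a four-port SATA card in it shares roughly 500 MB/s, or about 125 MB/s per drive when all four are busy, which is less than a single SATA SSD can push on its own.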

I have a C621 chipset on my motherboard. It just keeps on trucking when you plug in funky h/w.

This doesn’t affect me, but I love knowing stuff like this. I had no idea the PCIe slots behaved like that… thanks for typing all of that up!

Any chart of the “sequential steady-state write workload” for the Samsung 980? Or your opinion?

Thanks for this great info. I bought BarraCuda Q5s and put them in RAID 0 (2x 1TB).

Plotting with a 3600X and 32GB of memory.

I used 60 minutes between the start of each plot. Read and write speeds are less than 80 MB/s.

Should have seen this thread earlier.

I will try 120 minutes and see what it does.
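A rough way to reason about 60 versus 120 minutes: if one plot starts every `stagger_minutes` and each plot takes `plot_minutes` to finish, at most ceil(plot_minutes / stagger_minutes) plots overlap at steady state. This minimal Python sketch assumes the per-plot time stays fixed, which it does not in practice, since more overlap on the same drives slows every plot down; the 600-minute plot time is a hypothetical figure, not a measurement from this thread.

```python
import math


def max_overlapping_plots(plot_minutes: float, stagger_minutes: float) -> int:
    """Upper bound on plots running at once when a new plot starts every stagger_minutes."""
    return math.ceil(plot_minutes / stagger_minutes)


if __name__ == "__main__":
    plot_minutes = 600  # hypothetical time per plot; substitute your own measurement
    for stagger in (60, 120):
        overlap = max_overlapping_plots(plot_minutes, stagger)
        print(f"{stagger} min stagger -> up to {overlap} plots at once")
```

Doubling the stagger halves the overlap, so each plot competes for less disk bandwidth; whether that nets more plots per day depends on how much faster each individual plot gets.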

Maybe remove the RAID 0 on the NVMe drives and run 3 and 3 threads separately, each to its own final drive.

Anybody have an idea?

Thanks for the great tips over here!

I have pretty much the same setup over here, as I used your specs to build my plotter.

What I found out over the last week is that a delay of 245 minutes, starting 4 plots at the same time, seems to be the best static setup.

Using a plot manager, as WolfGT suggested, gave me a slightly better result: max 10 plots and max 3 plots in phase 1, using 5 threads. The 10 plots end up distributed so that most of the time only 9 plots are running (since the limit of 3 plots in phase 1 is the bottleneck), and I never had an issue with the temp storage. The result varies per day (sometimes there is an overlap from the day before) between a minimum of 19 ppd and 23 ppd.
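The arithmetic behind the 245-minute static setup: starting a batch of 4 plots every 245 minutes caps output at 4 × 1440 / 245 ≈ 23.5 plots per day, which lines up with the top of the 19 to 23 ppd range reported here. A minimal Python sketch, assuming every batch keeps pace with the schedule (the function name is illustrative):

```python
MINUTES_PER_DAY = 24 * 60


def max_plots_per_day(plots_per_batch: int, batch_interval_minutes: float) -> float:
    """Ceiling on daily output when a new batch starts every batch_interval_minutes.

    Assumes each batch finishes in time; any slowdown only lowers the real figure.
    """
    return plots_per_batch * MINUTES_PER_DAY / batch_interval_minutes


if __name__ == "__main__":
    print(f"{max_plots_per_day(4, 245):.1f} plots/day")
```

Days that come in below the ceiling are the ones where plots ran long or the previous day's overlap pushed a batch back.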

What did you find when looking it up?

P.S. Do NOT use that drive; it’s a QLC drive, and besides the horrible performance you’ll also burn through it pretty quickly…

Oh dude I’m flattered and happy the post has helped! Exciting!

Do we have the same CPU and 2x 1TB Samsung 980 Pros in the plotter build? If so, I just did this analysis last night and captured some of what I found with our setups here:

So the end result is more of a waterfall than popping 4 parallel plots every 4 hrs like you mention, BUT I don’t know if it’s necessarily better.

The one thing I can’t have, though, is more than 7 plots existing on the 2TB RAID 0 I plot on, because then the disk fills and ALL threads lock up waiting for space to appear (they check every 5 mins).

So with your approach, I’d be really nervous about that 8th plot coming into existence before the 1st plot had a chance to move off the disk and have its temp files cleaned up.
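The 7-plot ceiling falls out of the temp-space arithmetic. A minimal Python sketch, assuming the commonly cited peak temp usage of about 239 GiB per k=32 plot with default settings (check the figure for your plotter version): a 2TB RAID 0 is 2 × 10^12 bytes, or about 1,863 GiB, which fits 7 plots at peak but not 8 (8 × 239 = 1,912 GiB).

```python
GIB = 2**30
K32_PEAK_TEMP_GIB = 239  # assumed peak temp usage per k=32 plot (default settings)


def max_plots_at_peak(temp_capacity_bytes: int, peak_temp_gib: float = K32_PEAK_TEMP_GIB) -> int:
    """Worst case: how many plots fit if all of them hit peak temp usage at the same time."""
    return int(temp_capacity_bytes // (peak_temp_gib * GIB))


if __name__ == "__main__":
    two_tb_raid0 = 2 * 10**12  # two 1TB drives, vendor (decimal) terabytes
    print(max_plots_at_peak(two_tb_raid0))
```

Staggered plots sit in different phases, so actual usage at any instant is usually below this worst case, but this is the number that guarantees the disk never fills and freezes every job.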

BUT if you’ve been running this just fine, then I guess it works and I’ll need to dial my timings in a bit here.

This must have been what happened to me. Now I’ve found the answer, thank you for this.

Hello, and first of all, thank you for taking the time to answer.

I am glad I found your setup to get my feet wet on the whole mining topic. My plotter is running on an i7 11700K with two 1TB 980 Pros and 64GB RAM, so at the time I got my hardware it was exactly what was up to date in this post.

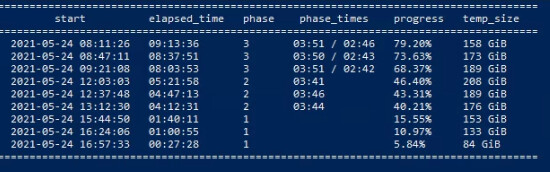

I was a bit unclear with my first post as I did not know it was really getting attention ![]() here is a screenshot of what is happening while plotting

here is a screenshot of what is happening while plotting

The two 980 Pros are in a storage pool, so there is 1.8TiB to store on. When I took the screenshot above there was still 366GiB of free storage; it seems to be because of the staggering during the phases.

Starting all four plots at exactly the same time also seemed to be slower than having them 15 minutes apart. As I am still playing around with the threads assigned to the plots during phase 1, I am now using 5 threads with 3 plots and a minimum 15-minute delay. What you see above is the plot manager doing its job by itself, which is why the gaps between start times are no longer exactly 15 minutes. (Max 10 total plots, max 3 phase-1 plots.)
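One way to see why the start gaps drift past 15 minutes: a new plot only starts when every rule passes at once. Below is a minimal Python sketch of that gate using the limits quoted above (max 10 total, max 3 in phase 1, at least 15 minutes since the last start). It is a simplified model for illustration, not Swar’s Plot Manager code, and the `Job` type and `can_start_plot` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Job:
    phase: int         # current plotting phase, 1 to 4
    started_at: float  # start time in minutes


def can_start_plot(
    jobs: Sequence[Job],
    now: float,
    max_total: int = 10,
    max_phase1: int = 3,
    min_gap_minutes: float = 15,
) -> bool:
    """Return True only if every scheduling rule allows a new plot at time `now`."""
    if len(jobs) >= max_total:
        return False  # total cap reached
    if sum(1 for job in jobs if job.phase == 1) >= max_phase1:
        return False  # phase 1 is full; wait for one plot to reach phase 2
    if jobs and now - max(job.started_at for job in jobs) < min_gap_minutes:
        return False  # too soon after the most recent start
    return True


if __name__ == "__main__":
    running = [Job(phase=1, started_at=0), Job(phase=1, started_at=15), Job(phase=1, started_at=30)]
    # 60 minutes in, but three plots are still in phase 1, so the next start waits.
    print(can_start_plot(running, now=60))
```

With 15 minutes as only the minimum, the real gap is set by whichever rule releases last, usually the phase-1 cap, so the start times spread out without any manual tuning.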

Maybe my screenshot can help us both improve our settings.

Twinsies!

Re: screenshot, are those ‘elapsed time’ in hh:mm:ss? For example, the top one has been running for just over 9 hrs and is in Phase 3 of a plot?

Very interesting. Please keep an eye on it and let me know if the 1st one completes and another one queues up just fine… What I saw when I was plotting is that during Phase 1 all the disk space gets consumed, and it wasn’t until the end of the Copy operation, when the .tmp files were cleaned up, that space became available again. So one time I ran 8 parallel plots, and about 2 hrs in the disk filled up with 0 space left and all plots froze, so I killed them all and started over.

Ah, OK, I haven’t messed with non-default thread or memory-per-plot assignments. If you do some digging in the forums, I think there are confirmations of how much that helps or doesn’t help (from what I remember, it wasn’t amazing).

Yep, I noticed something similar: firing up 6+ plots at the same time caused the runtimes to double compared to a single-plot baseline I’m tracking.

What do you mean by “by itself”? Doesn’t it just follow whatever rules you set up in the config?