Hey everybody, it’s been a while since I posted an update for my DIY JBOD setup. I last mentioned in that thread that I had come across a great deal on some Dell SC200 SAS enclosures and drives, so I was working on tearing down my DIY setup to move everything over. I wanted to detail my experiences here in a new thread in the hope that it helps other farmers out there. Questions and feedback welcome!

My DIY setup was up to over 400tb and was made up of about 130 refurbished server SATA 4tb drives that I got for around $10/tb. It was still running great and I think I could continue scaling it, but the deal I found on the SAS enclosures changed my perspective and journey quite a bit.

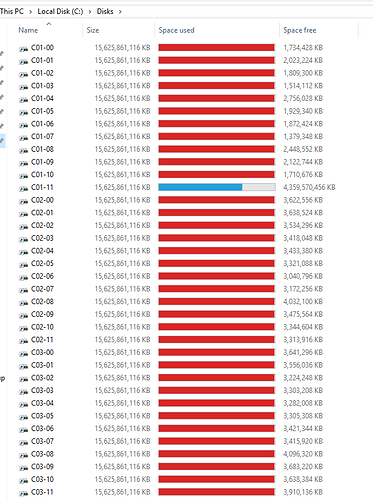

I purchased 9 Dell SC200 enclosures, each full of twelve 4tb SAS drives, for a little over $3200 shipped. This basically doubled my entire capacity for around $7.50/tb. I had never played with SAS drives before, but I already had a Dell PowerEdge server that had external SAS ports, so I plugged it all up and they “just worked!” I had to flash my SAS HBA to IT mode so it would just pass all the drives through to the operating system, but other than that it was just like connecting a bunch of SATA drives.

Once I learned how easy and clean SAS is compared to my DIY SATA setup, there was no looking back. I still had over 130 4tb SATA drives, so I purchased 6 more Dell SC200s on eBay (you can get them empty for about $150 each, but don’t forget caddies). These enclosures work great for SAS or SATA, and you can mix and match in the same enclosure. They also daisy chain to each other, so you have a single SAS cable coming out of the server going to the first enclosure. Very clean!

Now I had 15 of these 2U enclosures, so I needed a server rack! I found a nice one on Facebook Marketplace about an hour away for a couple hundred bucks and it even came with several useful rails.

My long-term goal is to replace the smaller 4tb drives with larger drives as the cost per TB comes down, of course. My next unexpected deal came soon after I had these all set up - I paid $12,000 total for 49 brand new 16tb Seagate drives along with 12 brand new 18tb Seagate drives. This turned out to be exactly 1,000 tb at a cost of $12/tb for brand new high-capacity drives. I couldn’t refuse! This deal was an in-person Bitcoin transaction - by far my largest to date. It went absolutely flawlessly, cost only a few bucks in fees and cemented crypto as the future of payment in my mind!
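For anyone who wants to check the math on the two drive deals, here is a quick sketch. All figures come straight from the post; the $3200 for the SAS deal is rounded down from "a little over $3200", so that result is approximate.

```python
# Drive purchase described above: (drive count, TB per drive).
new_drives = [(49, 16), (12, 18)]
new_price_usd = 12_000

new_capacity_tb = sum(count * size for count, size in new_drives)
new_cost_per_tb = new_price_usd / new_capacity_tb

# Earlier SAS deal: 9 enclosures x 12 drives x 4 TB each.
# The price is "a little over $3200", so treat this as a rough floor.
sas_capacity_tb = 9 * 12 * 4
sas_cost_per_tb = 3200 / sas_capacity_tb

print(f"New drives: {new_capacity_tb} TB at ${new_cost_per_tb:.2f}/TB")
print(f"SAS deal:   {sas_capacity_tb} TB at about ${sas_cost_per_tb:.2f}/TB")
```

The SAS deal works out to roughly $7.41/tb before the "little over" part, which lines up with the "around $7.50/tb" quoted earlier.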

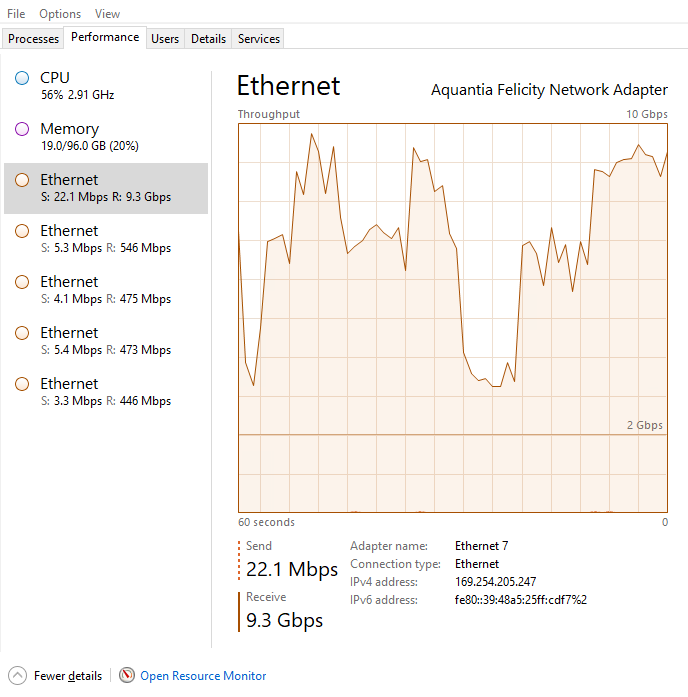

I began the slow process of replacing the 4tb drives with their larger brothers. Copying over 400tb of data one plot at a time would literally take weeks, but because of the multi-channel features of SAS I was able to cut this down to a few days by running twelve file copies at once. The server kept farming during these massive copies with no noticeable performance impact - another testament to the performance of SAS!
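Running twelve copies at once can be scripted in a few lines. This is only a minimal sketch of the idea, not the exact method used here: the `copy_plots` helper, the `*.plot` filename pattern, and the paths in the usage note are all assumptions for illustration.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def copy_plots(src_dir, dst_dir, workers=12):
    """Copy every *.plot file from src_dir to dst_dir, `workers` at a time.

    Hypothetical helper: the directory layout and file pattern are assumed.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    plots = sorted(src.glob("*.plot"))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for every copy and re-raises any error from a worker.
        copied = list(pool.map(lambda p: shutil.copy2(p, dst / p.name), plots))
    return [Path(c) for c in copied]
```

Something like `copy_plots("/mnt/old4tb-01", "/mnt/new16tb-01")` would move one drive's worth of plots (both mount points are made up). Parallel copies only help if they are spread across different physical drives, so in practice you would probably run one call per source/destination pair rather than twelve workers hammering a single disk.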

With all high-capacity drives installed along with 4tb drives filling out the rest of the enclosures, I now have about 1.5pb of space total! I suddenly realized that I had no plan on how to actually fill all this space - I’ve been plotting since April and I’ve only managed to fill a little over 400tb. At current speeds, it would take another year to fill all this space! I needed more plotting power…back to deal search!

I found an auction for what looked like an HP c7000 BladeSystem chassis. It was mislabeled and only had a single picture of the back of the chassis, so I assumed it was just the empty chassis. Still, these can cost $1000 used for just the chassis, not including any actual blade servers. The chassis holds 16 HP blade servers and is compatible with several generations of them, including Gen 9 and 10, the two latest generations. It has 10gb internal network connections and all kinds of fun connectivity options using the 8 “interconnect” slots on the back, which can be mapped to the blade servers in the front. These are basically “data centers in a box” - just slide your blade in and it’s connected and ready to go.

I won the auction for a little over $200. The freight actually cost more than the auction - this thing weighs in at almost 400 pounds empty. But I thought it’d be a great base from which to build my plotting empire.

I received the chassis and could not believe my luck - slotted into the front were SEVEN Gen 9 blade servers!!! Not only that, these things were almost maxed out. All of them have dual E5-2695 v3 14-core Xeons - that’s 28 cores and 56 threads each. Four of them had 384gb RAM and the other three had 256gb RAM. This setup easily cost over $100k new just a few years ago…what an incredible deal! I thought seriously about just giving up on Chia and cashing out right then!!

I swapped memory around so that 4 of the blades now have 448gb of RAM - perfect for Bladebit. These monsters pump out a plot every 15 minutes. I literally can’t write the plots to the disks fast enough over the network - they spend a lot of time waiting. While they wait, the CPUs mine Raptoreum - more on that later.
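To put "can’t write the plots fast enough" into numbers, here is a rough back-of-the-envelope sketch. The 15 minutes per plot and the four blades come from the post; the plot size is my assumption (a standard k=32 plot is about 101.4 GiB, roughly 108.9 GB), and it assumes all four blades plot nonstop.

```python
PLOT_SIZE_GB = 108.9   # assumption: typical k=32 plot, about 101.4 GiB
MINUTES_PER_PLOT = 15  # from the post
BLADES = 4             # blades running Bladebit, from the post


def plot_output(blades=BLADES, minutes_per_plot=MINUTES_PER_PLOT,
                plot_gb=PLOT_SIZE_GB):
    """Return (plots/day, TB/day, MB/s, Gbit/s) if the blades never wait."""
    plots_per_day = blades * (24 * 60 / minutes_per_plot)
    tb_per_day = plots_per_day * plot_gb / 1000
    # Sustained write rate needed to move plots as fast as they are produced.
    mb_per_s = blades * plot_gb * 1000 / (minutes_per_plot * 60)
    gbit_per_s = mb_per_s * 8 / 1000
    return plots_per_day, tb_per_day, mb_per_s, gbit_per_s


plots, tb, mbs, gbit = plot_output()
print(f"{plots:.0f} plots/day, {tb:.1f} TB/day, {mbs:.0f} MB/s, {gbit:.2f} Gbit/s")
```

Under those assumptions the four blades together would need somewhere near 4 Gbit/s of sustained writes to the array, so it is not surprising that they spend a lot of time waiting on the network.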

Two of the other blades are currently plotting Chives plots using Madmax and ramdisks. They can pump out the smaller plots in 10-30 minutes as well. Once I’m happy with my Chives plots I’ll put them back on Chia plotting.

The last blade is my farmer, currently running almost 20 forks. The big disk array is not directly connected to the blade chassis yet while I figure out SAS connectivity. My old PowerEdge is still running the 15 SC200s as a pure harvester. Once I figure out SAS connectivity, the farmer will connect directly to the array.

Whew! Ok, time for the picture:

From bottom up:

- The HP c7000 chassis with 7 BL460C G9 blade servers

- PowerEdge R610 (1U) running the array above it

- 15 Dell SC200s (bottom few still need caddies)

- Bonus shot of Eth mining rig in right background along with two laptops busy plotting as well

I’ve moved from the laundry room out to the garage after outgrowing both the space and the electrical outlet. I installed two 240v outlets below my breaker box, each on its own breaker. Total electric usage for just farming is a little under 1kw. With all blades maxed out plotting, the total is closer to 3kw. I leave the door between the garage and the house open and let it heat the house while it’s cold out - we haven’t turned on the heater yet!

Any questions, just ask! Also looking for improvement suggestions or any experts on HP blade server hardware/software. I’ve been stumbling my way through it but still have lots of questions!!
